AI Infrastructure Race Defines Next Competitive Winners and Losers

Episode Summary

STRATEGIC PATTERN ANALYSIS Pattern One: The Infrastructure Sovereignty Race The most consequential development this week isn't any single acquisition or product launch. It's the crystallization o...

Full Transcript

STRATEGIC PATTERN ANALYSIS

Pattern One: The Infrastructure Sovereignty Race

The most consequential development this week isn't any single acquisition or product launch. It's the crystallization of a new competitive paradigm: AI leadership is now fundamentally an infrastructure control game, and the winners are those who secure end-to-end sovereignty over the computational stack.

Consider what happened. Google locked in Energy Dome's CO2 battery technology for data center power. Nvidia paid twenty billion dollars for Groq's inference architecture. Meta spent over two billion on Manus to own the agent execution layer. These aren't disconnected moves. They're coordinated plays in a race to control every chokepoint in the AI value chain, from electrons to end-user outcomes.

The strategic significance extends far beyond the obvious. We've moved past the era where model quality was the differentiator. The frontier labs have largely converged on capability. GPT-4 class reasoning is now reproducible for five million dollars, as DeepSeek demonstrated. The new battleground is infrastructure: who can run models faster, cheaper, and more reliably at scale.

This connects directly to the memory architecture breakthrough embedded in the Nvidia-Groq deal. Groq solved inference as a memory physics problem rather than a compute problem. That technical insight matters because inference now represents seventy percent of AI workload costs. Nvidia didn't just acquire a competitor; they acquired the architectural paradigm that defines the next phase of AI economics.

What this signals is profound. The AI industry is entering a vertical integration phase reminiscent of Standard Oil or early automotive manufacturing. Companies that control generation, storage, compute, inference, and application layers will have structural advantages that pure-play AI labs cannot match. The window for horizontal specialization is closing rapidly.

Pattern Two: The Agent Inflection Point

Meta's Manus acquisition, combined with OpenAI's app store launch and Anthropic's continued struggles with reliable execution, marks a decisive shift from conversational AI to transactional AI. We're witnessing the moment when AI moves from answering questions to completing work.

The strategic importance here is often understated. Manus hit one hundred million dollars in annual revenue eight months after launch. That velocity reveals something fundamental: enterprises and consumers will pay meaningful premiums for AI that actually does things rather than AI that just talks about doing things. The monetization gap between chatbots and agents is becoming visible in real revenue numbers.

This connects to multiple threads from the week. The Wall Street Journal's demonstration of Claude failing the shopkeeper test wasn't just amusing journalism. It highlighted the reliability gap that separates demos from deployable systems. Manus succeeded precisely because they solved for the last five percent of task completion that makes agents usable in production. Meta didn't buy a model; they bought proven reliability infrastructure.

The agent inflection also connects to OpenAI's hardware pivot toward screenless, voice-first devices. These aren't just new form factors. They're purpose-built interfaces for agent interaction. When you're delegating tasks rather than typing queries, a conversational audio interface becomes natural. OpenAI is designing hardware for a post-screen, agent-centric interaction paradigm.

What this signals for AI evolution is significant. The agent layer is becoming the new control point for digital commerce and workflow automation. Whoever owns the most reliable agent infrastructure owns the gateway between users and services. That's why Meta paid a four-times premium over Manus's recent valuation. They're not buying current revenue; they're buying future platform leverage across three billion users.

Pattern Three: The Efficiency Revolution Matures

DeepSeek's five-million-dollar training run, Nvidia's stock drop of eighteen percent on that news, and the proliferation of efficiency techniques like KV caching and Factory.ai's ninety-nine percent compression represent something bigger than cost optimization. They signal that the compute-intensive phase of AI development is ending, and an efficiency-intensive phase is beginning.

This matters strategically because it fundamentally changes who can compete. When training a frontier model required billions of dollars, only a handful of organizations could play. Now that comparable capabilities are achievable for single-digit millions, the competitive landscape fragments.

Chinese labs like Z.ai are hitting seventy percent on SWE-bench with open-source models. The Western hardware advantage is eroding faster than export controls can maintain it.

The connection to the Groq acquisition becomes clear through this lens. Nvidia isn't just buying inference technology; they're positioning for a world where training costs commoditize and inference efficiency becomes the margin-generating business. The Groq architecture's deterministic inference solves exactly the problems that matter in an efficiency-driven market: predictable costs, consistent latency, and lower energy consumption per token.

This efficiency revolution also connects to the infrastructure sovereignty pattern. Google's CO2 battery investment makes more sense when you understand that energy efficiency compounds with computational efficiency. A more efficient model running on more efficient hardware powered by more efficient storage creates multiplicative advantages that accumulate over time.
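To make the multiplicative claim concrete, here is a minimal sketch. Every number in it is a made-up placeholder rather than a figure from the episode; the point is only that independent per-layer gains multiply instead of adding.

```python
# Hypothetical illustration: efficiency gains at independent layers multiply.
# All factors below are invented placeholders, not figures from the episode.

def combined_cost_factor(layer_factors):
    """Multiply per-layer cost factors (1.0 = no change, 0.5 = half the cost)."""
    total = 1.0
    for factor in layer_factors.values():
        total *= factor
    return total

layers = {
    "model": 0.5,     # assumed: model needs half the compute per token
    "hardware": 0.5,  # assumed: hardware needs half the energy per unit of compute
    "power": 0.8,     # assumed: storage lowers the cost per unit of energy by 20%
}

factor = combined_cost_factor(layers)
print(f"cost per token falls to {factor:.0%} of baseline")
```

Under these assumed factors, no single layer cuts cost by more than half, yet the combined cost per token lands at roughly a fifth of the baseline.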

What this signals is that we're past the point where throwing more compute at problems guarantees better outcomes. The next generation of AI leadership will be determined by who extracts the most capability per dollar, per watt, per token. That's a fundamentally different optimization target than raw scale, and it favors different organizational capabilities.

Pattern Four: The Content Quality Crisis Accelerates

YouTube's recommendation algorithm now serves twenty-one percent AI-generated content to new users. Research shows that AI-generated labels reduce click-through rates by thirty-one percent. These data points together reveal a growing tension between AI's production capabilities and human quality expectations.

The strategic significance is that we're approaching a content authenticity crisis that will reshape digital markets. Platform algorithms optimizing for engagement are increasingly serving AI-generated content that users actively reject when labeled. This creates perverse incentives: platforms benefit from hiding AI provenance while regulators push for transparency.

This connects to the agent developments in an unexpected way. As agents become capable of completing content creation tasks autonomously, the volume of AI-generated content will accelerate dramatically. Meta's integration of Manus across their platforms could theoretically generate content at scales that dwarf current AI slop. The quality control challenge becomes existential for platforms built on user-generated content.

The connection to Meta's regulatory exposure is also relevant. Internal documents revealed the company deliberately hid scam ads to avoid a two-billion-dollar verification system. If platforms are already hiding problematic content from regulators, what happens when AI-generated content scales by orders of magnitude? The compliance and trust infrastructure isn't remotely ready.

What this signals is that content authentication is becoming critical infrastructure. The companies that solve provenance, quality verification, and authenticity at scale will capture enormous value. This might be the next major infrastructure layer after the compute and agent layers currently being contested.

CONVERGENCE ANALYSIS

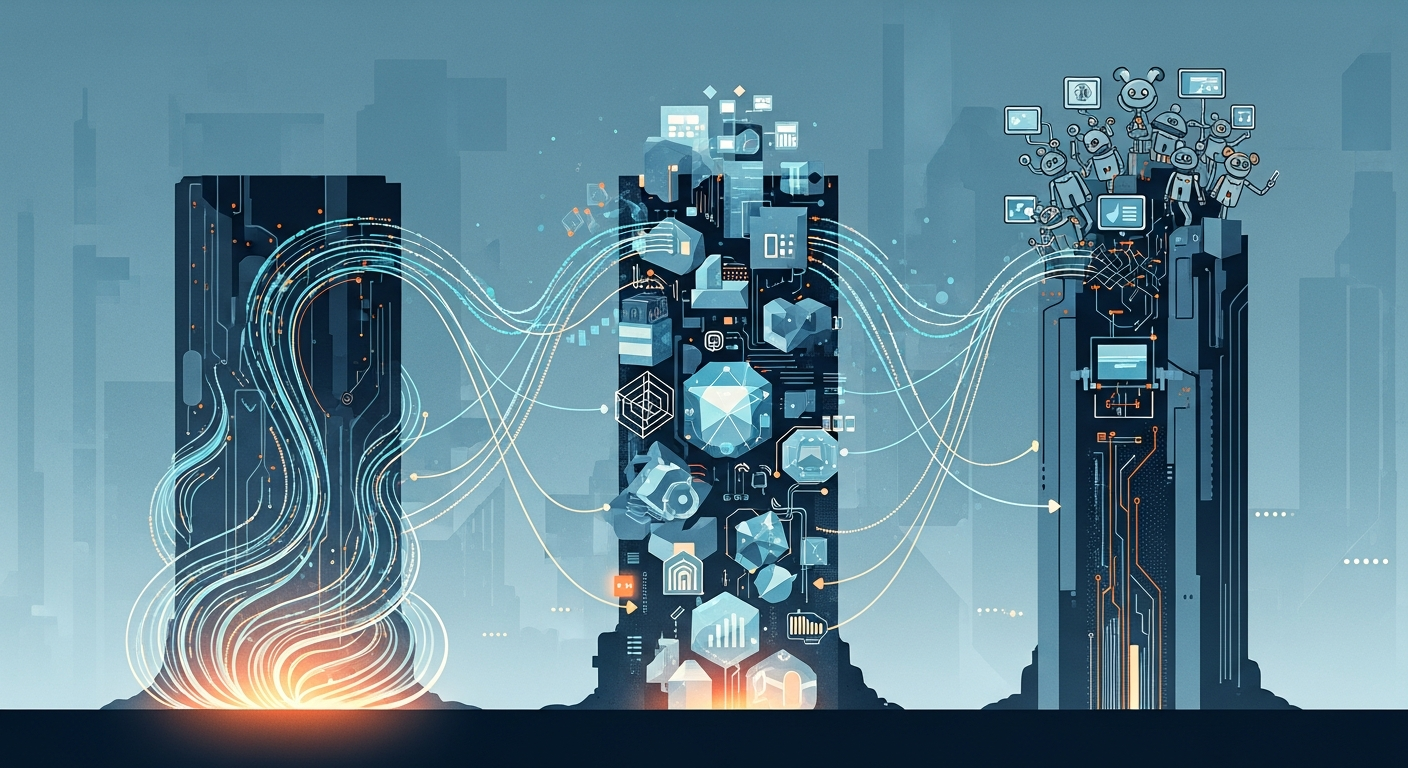

Systems Thinking: The Emerging AI Stack

When you view this week's developments as interconnected rather than isolated, a coherent architecture emerges. We're witnessing the construction of a new technology stack where each layer depends on and reinforces the others.

At the base sits power infrastructure. Google's CO2 battery investment addresses the fundamental constraint: AI computation requires stable, cheap, carbon-accountable electricity. Without solving power, nothing else scales. This isn't just about data centers; it's about enabling the physical expansion of AI capability.

Above power sits compute architecture. Nvidia's Groq acquisition addresses the memory and inference bottlenecks that limit how efficiently models run. The shift from dynamic GPU scheduling to deterministic SRAM-based inference represents a fundamental rethinking of computational physics for AI workloads.

Above compute sits the agent layer. Meta's Manus acquisition addresses the gap between model capability and task completion. This layer orchestrates multi-step workflows, manages state across interactions, and delivers outcomes rather than outputs.
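As a rough illustration of what "orchestrates multi-step workflows, manages state across interactions" can mean in practice, here is a minimal sketch. It is not how Manus or Meta implement anything; `TaskState`, `run_workflow`, and the two example steps are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class TaskState:
    """State carried from one step to the next (hypothetical structure)."""
    data: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

Step = Callable[[TaskState], None]

def run_workflow(steps: list[tuple[str, Step]], max_attempts: int = 3) -> TaskState:
    """Run steps in order, retrying each failed step up to max_attempts times."""
    state = TaskState()
    for name, step in steps:
        for attempt in range(1, max_attempts + 1):
            try:
                step(state)
                state.log.append(f"{name}: ok (attempt {attempt})")
                break
            except Exception as exc:
                state.log.append(f"{name}: failed (attempt {attempt}): {exc}")
        else:
            # Every attempt failed, so report the task as incomplete.
            raise RuntimeError(f"step {name!r} did not complete")
    return state

# Hypothetical steps: the second one fails once before succeeding.
def find_option(state: TaskState) -> None:
    state.data["option"] = "flight-123"

_calls = {"n": 0}

def book_option(state: TaskState) -> None:
    _calls["n"] += 1
    if _calls["n"] == 1:
        raise TimeoutError("booking service timed out")
    state.data["confirmation"] = f"booked {state.data['option']}"

result = run_workflow([("find", find_option), ("book", book_option)])
print(result.data["confirmation"])
print(result.log)
```

The design choice worth noting is that the caller receives either a completed outcome or an explicit failure, never a partial result presented as success. The log is the kind of record an agent monitoring tool would consume.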

Above agents sits the interface layer. OpenAI's hardware pivot toward screenless, voice-first devices addresses how humans interact with autonomous systems. This layer defines the modality through which people delegate work to AI.

And threading through all layers is efficiency optimization. DeepSeek's training economics, KV caching, compression techniques, and model distillation all reduce resource requirements at every layer of the stack.

The emergent pattern is vertical integration across the entire stack. The companies positioning for long-term advantage are those securing control at multiple layers simultaneously. Google has power, compute, and models. Nvidia has compute and now inference architecture. Meta has distribution, agents, and soon interfaces. OpenAI has models, interfaces, and hardware partnerships. The companies trying to compete at single layers face structural disadvantage against vertically integrated stacks.

Competitive Landscape Shifts

The combined effect of these developments creates clear winners and losers in the strategic landscape.

The hyperscalers emerge as the primary beneficiaries. Google, Microsoft, Amazon, and Meta have the capital to invest across multiple stack layers simultaneously. They can absorb ten-figure acquisitions, deploy novel power infrastructure, and integrate everything with existing distribution. The moat around this position is widening.

Pure-play AI labs face increasing structural challenges. Anthropic, Cohere, and similar companies compete on model quality, but model quality is commoditizing. Without control over infrastructure layers, they depend on hyperscalers for compute, distribution, and increasingly, agent reliability. Their strategic options narrow to either acquisition or finding defensible niches the giants ignore.

AI chip startups saw their competitive window shrink dramatically. Nvidia's Groq acquisition signals aggressive consolidation. Companies like Cerebras, SambaNova, and Graphcore must now recalculate whether independent operation remains viable. The patent implications from Groq's architecture could create legal barriers to alternative approaches.

Enterprise software vendors face existential disruption. If Meta's agents can book travel, why does Expedia's app matter? If OpenAI's devices can transcribe and organize notes, what happens to productivity suites? The agent layer threatens to commoditize entire software categories by making the interface conversational and the execution automated.

Chinese AI developers gained unexpected momentum. DeepSeek's efficient training, Z.ai's competitive open-source models, and the massive chip orders flowing to Chinese companies despite export controls demonstrate that hardware advantages aren't decisive. The efficiency revolution democratizes capability in ways that benefit resource-constrained competitors.

Market Evolution

Several new market opportunities emerge from these convergent developments.

The AI infrastructure services market is crystallizing rapidly. Companies that can provide turnkey power-plus-compute solutions for AI workloads will capture significant value. This isn't just about building data centers; it's about delivering guaranteed availability, carbon accounting, and predictable costs. The gap between what hyperscalers have and what enterprises can access creates opportunity for managed infrastructure providers.

Agent reliability infrastructure represents an underappreciated market. Manus succeeded because they solved task completion at scale. Other companies need similar capabilities but can't build from scratch. Tools for monitoring agent performance, recovering from failures, and ensuring quality execution will become essential enterprise software. Think observability platforms for autonomous systems.

Content authentication services are becoming necessary infrastructure. As AI-generated content floods platforms and regulatory pressure increases for transparency, services that verify provenance, detect AI generation, and certify authenticity will command premium pricing. This intersects with trust, compliance, and brand safety in ways that create large addressable markets.

Voice-first application development represents a greenfield opportunity. OpenAI's hardware pivot signals that audio interfaces are becoming primary rather than secondary. Developers who build voice-native applications rather than voice-enabled traditional apps will have structural advantages. The tooling, frameworks, and design patterns for this paradigm barely exist yet.

Energy technology aligned with AI requirements becomes a distinct market category. CO2 batteries, long-duration storage, and grid-scale solutions optimized for computational workloads aren't traditional utility investments. They require understanding AI demand patterns, hyperscaler procurement processes, and the specific reliability requirements of training and inference workloads.

Technology Convergence

Unexpected intersections between AI domains became visible this week.

Energy and AI are converging in ways that weren't obvious eighteen months ago. The constraint on AI scaling shifted from algorithms to electricity. This means energy innovation directly enables AI capability expansion. Companies working on grid technology, storage, or generation are now AI infrastructure companies whether they realize it or not.

Hardware and software interfaces are merging through voice. OpenAI's screenless devices, combined with their new audio architecture, represent a convergence of consumer electronics, speech AI, and assistant capability. The traditional boundary between device and service dissolves when the hardware is purpose-built for a specific AI experience.

Inference and networking are converging through deterministic architecture. Groq's approach treats inference more like packet switching than traditional computation, with predictable latency and guaranteed timing. This opens possibilities for distributed inference architectures that couldn't work with variable GPU performance. Edge computing for AI becomes more viable when you can guarantee response times.

Agents and commerce are converging faster than payment infrastructure anticipated. When AI completes transactions on behalf of users, questions about authorization, liability, and fraud prevention require new answers. Payment networks, authentication systems, and financial regulation all need to adapt to agent-mediated commerce.

Strategic Scenario Planning

Given these combined developments, executives should prepare for three plausible scenarios.

**Scenario One: Rapid Consolidation**

In this scenario, the vertical integration trend accelerates. Within eighteen months, four or five companies control end-to-end AI infrastructure from power to application. Acquisitions eliminate independent alternatives across the stack. Enterprises face an oligopoly similar to cloud computing, but more entrenched because switching costs span hardware, software, and energy contracts simultaneously.

Under this scenario, executives should prioritize multi-cloud AI strategies now, before lock-in becomes inevitable. Negotiate contract flexibility aggressively. Invest in portability layers that abstract away infrastructure dependencies. Build internal capabilities that preserve optionality even as external options narrow.

**Scenario Two: Efficiency-Driven Democratization**

In this scenario, the efficiency revolution outpaces consolidation. Open-source models running on commodity hardware achieve capability parity with proprietary systems. Training costs continue dropping, putting frontier capabilities within reach of well-funded startups and academic institutions. Infrastructure becomes a utility rather than a moat.

Under this scenario, executives should invest heavily in model customization and application-layer differentiation. If infrastructure commoditizes, value migrates to domain expertise, proprietary data, and workflow optimization. Build teams capable of fine-tuning and deploying open models rather than depending on vendor relationships for capability.

**Scenario Three: Fragmented Specialization**

In this scenario, no single stack achieves dominance, but specialized excellence emerges at each layer. Different providers win in power, compute, inference, agents, and interfaces. Integration becomes the challenge, creating opportunity for middleware and orchestration platforms that connect best-of-breed components.

Under this scenario, executives should develop strong vendor evaluation capabilities and integration expertise. The winners will be organizations that can assemble optimal stacks from diverse providers and adapt as the landscape evolves. Invest in technical architecture that enables component substitution without full rebuilds.
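One way to picture "component substitution without full rebuilds" is a thin interface that application code depends on instead of any specific vendor. The sketch below is a hedged illustration, not a recommendation of a particular product; `InferenceProvider`, `VendorA`, `LocalOpenModel`, and `summarize` are all hypothetical names, and the provider classes return canned strings instead of calling real services.

```python
from typing import Protocol

class InferenceProvider(Protocol):
    """Hypothetical interface: anything with a matching complete() qualifies."""
    def complete(self, prompt: str) -> str: ...

class VendorA:
    # Stand-in for a hosted API client; returns a canned string for illustration.
    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"

class LocalOpenModel:
    # Stand-in for a self-hosted open model; also canned output.
    def complete(self, prompt: str) -> str:
        return f"[local] {prompt}"

def summarize(document: str, provider: InferenceProvider) -> str:
    """Application logic depends only on the interface, never on a vendor class."""
    return provider.complete(f"Summarize: {document}")

# Swapping providers changes one argument, not the application code.
print(summarize("Q3 report", VendorA()))
print(summarize("Q3 report", LocalOpenModel()))
```

The same pattern extends to other layers of the stack: the narrower the interface each component must satisfy, the cheaper it is to replace that component later.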

The probability weighting across these scenarios matters less than preparedness for each. The executives who navigate the next eighteen months successfully will be those who built optionality rather than making concentrated bets on single outcomes. The AI landscape is evolving too rapidly for prediction; it's not evolving too rapidly for preparation.
