Sam Altman Claims OpenAI Built AGI, Microsoft Pushes Back Hard

Full Transcript

TOP NEWS HEADLINES

Sam Altman just dropped a bombshell in his latest Forbes profile, revealing OpenAI has a succession plan to eventually hand company leadership to an AI model.

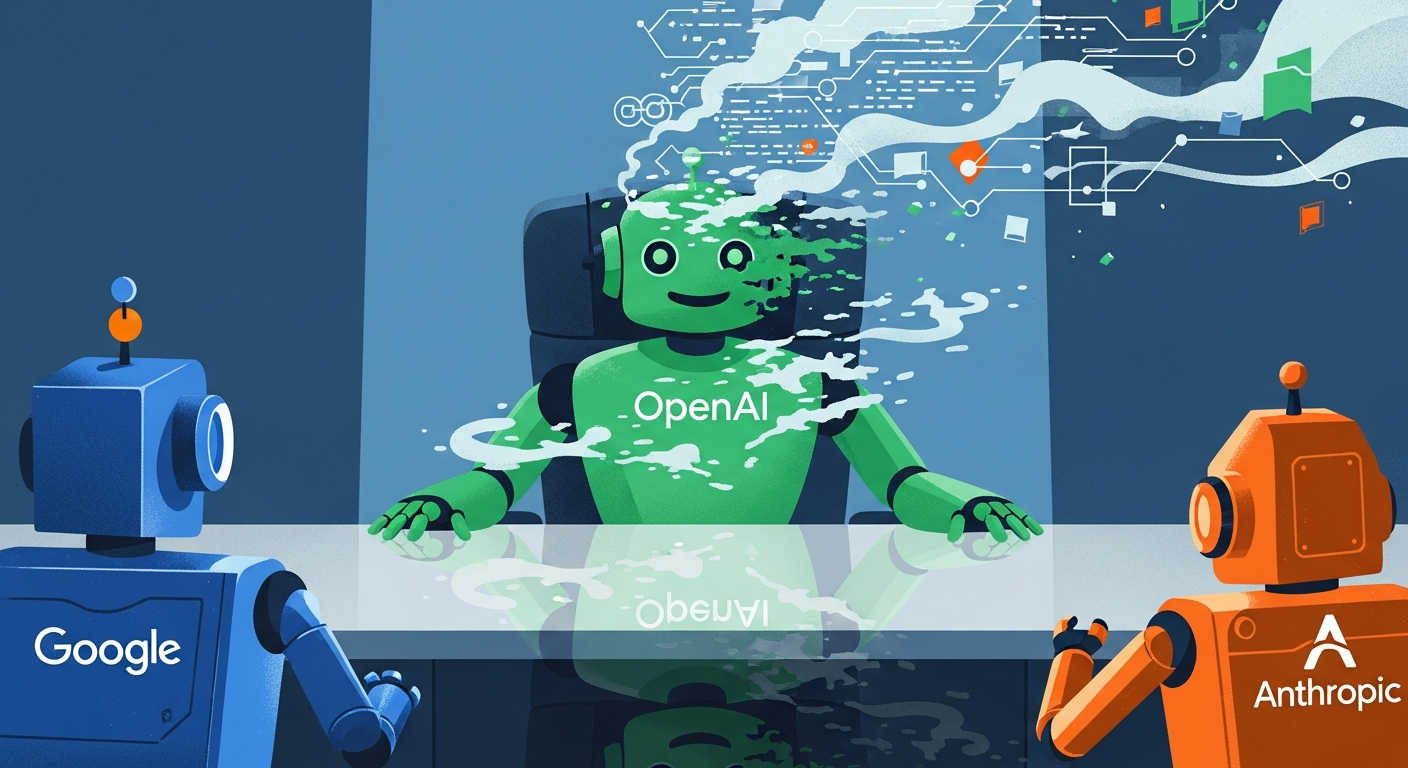

He's claiming they've "basically built AGI," though Microsoft CEO Satya Nadella immediately pushed back on that assertion, calling their relationship "frenemies." Meanwhile, employees are privately worried the company is trying to do too much, too quickly.

OpenAI is officially testing ads in ChatGPT this month, following Meta's playbook from the early Facebook days.

The ad push aims to fund OpenAI's massive burn rate while expanding free access, with premium rates for early advertisers.

The shift marks a dramatic pivot for Altman, who called AI chatbot ads "uniquely unsettling" just 16 months ago.

NASA's Perseverance rover completed its first autonomous drives on Mars planned entirely by Claude AI, covering nearly 1,500 feet without human-planned waypoints.

The breakthrough works around the roughly 20-minute communication delay between Earth and Mars and could cut mission planning time in half.

Apple launched Xcode 26.3 with full Claude Agent SDK integration, giving millions of iOS developers autonomous AI coding capabilities.

The killer feature is visual verification where Claude can capture previews, identify issues, and fix them independently.

The broader SaaS sector lost $285 billion in market value yesterday as Anthropic's new legal automation tools triggered panic about AI replacing entire software categories.

Legal research stocks like Thomson Reuters dropped 16% in what traders called "get me out"-style selling.

DEEP DIVE ANALYSIS: SAM ALTMAN'S AI SUCCESSION PLAN AND THE AGI CLAIMS RESHAPING CORPORATE GOVERNANCE

Technical Deep Dive

Sam Altman's claim that OpenAI has "basically built AGI" represents either visionary confidence or reckless hype, depending on who you ask. The technical reality is nuanced. OpenAI's latest models demonstrate reasoning capabilities that can plan multi-step tasks, maintain context across complex workflows, and even self-correct errors.

The company's internal benchmarks show performance approaching human expert levels across diverse domains, from coding to scientific research. However, most working definitions of AGI (artificial general intelligence) require systems that can match human cognitive abilities across virtually all domains, learn new tasks from minimal examples, and demonstrate genuine understanding rather than pattern matching. Current models still struggle with novel situations, lack robust common-sense reasoning, and can't transfer knowledge between domains the way humans do instinctively.

The succession plan to hand leadership to an AI model is technically fascinating but practically distant. While AI can now handle specific executive functions like analyzing quarterly reports or optimizing resource allocation, the judgment calls, ethical considerations, and stakeholder management required for CEO-level decisions remain firmly in human territory. Altman's framing suggests this is aspirational rather than imminent, but announcing it publicly shifts the Overton window on what's considered possible.

Financial Analysis

OpenAI's financial position makes Altman's bold claims both necessary and risky. The company is burning through billions annually while racing to justify a $300 billion valuation. Claiming AGI is "basically built" serves a strategic financial purpose—it reassures investors that the company is close to achieving its core mission, potentially unlocking transformative revenue streams.

The timing coincides with OpenAI's funding challenges. Reports indicate Nvidia's investment might land at $20 billion rather than the initially discussed higher figures, suggesting even deep-pocketed backers are getting cautious about valuations in the AI space. Altman needs to maintain the narrative that OpenAI is ahead of competitors like Anthropic and Google, justifying premium pricing and continued investment despite mounting losses.

Forbes reported Altman has stakes in over 500 companies, raising questions about focus and potential conflicts of interest. This sprawling portfolio suggests he's hedging his bets across the AI ecosystem rather than going all-in on OpenAI's success. For investors, this creates uncomfortable optics—if the CEO believes AGI is basically solved at OpenAI, why maintain such diversified holdings?

The AI succession plan, while technically years away, has immediate financial implications. It signals to the market that OpenAI views its AI as approaching CEO-level capabilities, which could justify higher product pricing and enterprise contracts. However, it also creates uncertainty about future governance and control structures that institutional investors typically demand clarity on.

Market Disruption

Altman's AGI claims arrive just as the broader market is experiencing an AI reality check. The $285 billion SaaS selloff triggered by Anthropic's legal automation tools shows investors are taking AI disruption threats seriously. If OpenAI truly has achieved AGI or something close to it, virtually every knowledge worker industry faces existential disruption within years rather than decades.

The competitive dynamics are shifting dramatically. Nadella publicly contradicting Altman's AGI claims, even as Microsoft remains OpenAI's biggest partner and investor, reveals fractures in what was supposed to be a unified front. His "frenemies" characterization is particularly telling: Microsoft has its own AI ambitions and may be hedging against being too dependent on OpenAI.

Anthropic is emerging as the dark horse winner in this narrative. While OpenAI makes grand AGI proclamations, Anthropic is shipping practical tools that are actually replacing software categories today. Their integration into Apple's Xcode gives them distribution to millions of developers, potentially establishing Claude as the default AI coding assistant.

The market may reward execution over promises. Google, despite having more AI research talent than anyone, continues to struggle with commercialization. Their Gemini integration with Android shows promise, but they're perpetually six months behind in shipping consumer-facing features. OpenAI's claim to have reached AGI first, even if exaggerated, maintains their narrative leadership while Google plays catch-up.

Cultural & Social Impact

The concept of handing a company to an AI CEO crosses a profound psychological threshold for society. It's one thing to have AI assistants or even autonomous agents handling specific tasks. It's entirely different to place an AI in a company's ultimate decision-making role: the chief executive responsible for strategy, culture, and stakeholder interests.

Altman's framing reveals his underlying philosophy: if the goal is to build AGI that can run companies better than humans, OpenAI should be the first to actually do it. This creates a fascinating paradox. It's simultaneously radical transparency about OpenAI's mission and a potential PR disaster if it implies human CEOs will become obsolete faster than society is ready to accept.

The employee concerns about doing "too much too quickly" captured in the Forbes profile point to internal cultural tensions. OpenAI has transformed from a nonprofit research lab focused on safety into a commercial juggernaut racing to ship products. Long-time employees who joined for the mission may feel uncomfortable with the pace and direction. High-profile departures of safety researchers over the past year support this narrative.

For the broader public, Altman's claims feed both utopian and dystopian AI narratives. Optimists see AGI as solving humanity's greatest challenges from disease to climate change. Pessimists worry about job displacement, loss of human agency, and existential risks. By claiming AGI is basically here, Altman forces society to confront these questions now rather than treating them as distant theoretical concerns.

Executive Action Plan

**For enterprise technology leaders:** Don't take the AGI claims at face value, but prepare for capabilities that approximate human expert performance across many domains within 18-24 months. Start mapping which knowledge worker functions in your organization could be automated or augmented by AI at that level. Build "AI readiness" into your workforce planning, not through layoffs but by identifying where humans add irreplaceable value versus where AI could dramatically increase leverage. Consider pilot programs with multiple AI providers rather than betting entirely on OpenAI, given the market volatility and competitive dynamics.

**For startup founders and product leaders:** The window for AI-native disruption remains wide open despite OpenAI's claims of dominance. Anthropic's success with focused, vertical tools shows that execution on specific use cases beats general capabilities. Identify knowledge worker workflows where AI can deliver 10x improvements today, not eventually. The market is rewarding companies shipping practical AI tools over those making AGI promises. Consider whether your product strategy assumes AI as a feature enhancement or as the core product; the latter is increasingly the only defensible position.

**For investors and board members:** Demand clarity on AI strategy beyond buzzwords. Ask management teams specific questions about which AI capabilities they're betting on, what happens if those capabilities arrive slower or faster than expected, and how they're measuring actual productivity improvements versus theoretical ones. The SaaS selloff shows the market is repricing software companies based on AI disruption risk. Get ahead of this by stress-testing portfolio companies' defensibility. For companies like OpenAI making extraordinary claims, require extraordinary evidence and alignment between leadership statements and actual roadmaps. The contradictions between Altman's AGI claims and Microsoft's pushback suggest due diligence on AI companies needs to go much deeper than typical tech investments.
