World Models Reach GPT-2 Moment with New Interactive APIs


Full Transcript

TOP NEWS HEADLINES

The AI world just got two major APIs that could change how we interact with digital environments.

Odyssey launched Odyssey-2 Pro, streaming real-time interactive video at 720p that responds to text commands as you type them.

Meanwhile, World Labs released their World API that transforms any image into a navigable 3D environment in under five minutes.

Anthropic rolled out Claude for Excel, specifically targeting enterprise users who need help debugging complex spreadsheet formulas and updating financial models without breaking existing calculations.

In a rare public criticism, DeepMind CEO Demis Hassabis questioned OpenAI's decision to add advertising to ChatGPT, calling it surprising and potentially at odds with building user trust in AI assistants.

Academic integrity in AI research took a hit as investigators found 100 fake citations across 51 papers at NeurIPS 2025, the field's premier conference.

The irony of AI hallucinations plaguing AI research papers wasn't lost on anyone.

And new Census Bureau data revealed we've been massively undercounting AI adoption.

After fixing their survey methodology, business adoption nearly doubled to 17.6%, while real spending data from Ramp shows the actual number closer to 46.6%.

DEEP DIVE ANALYSIS: THE GPT-2 MOMENT FOR WORLD MODELS

Technical Deep Dive

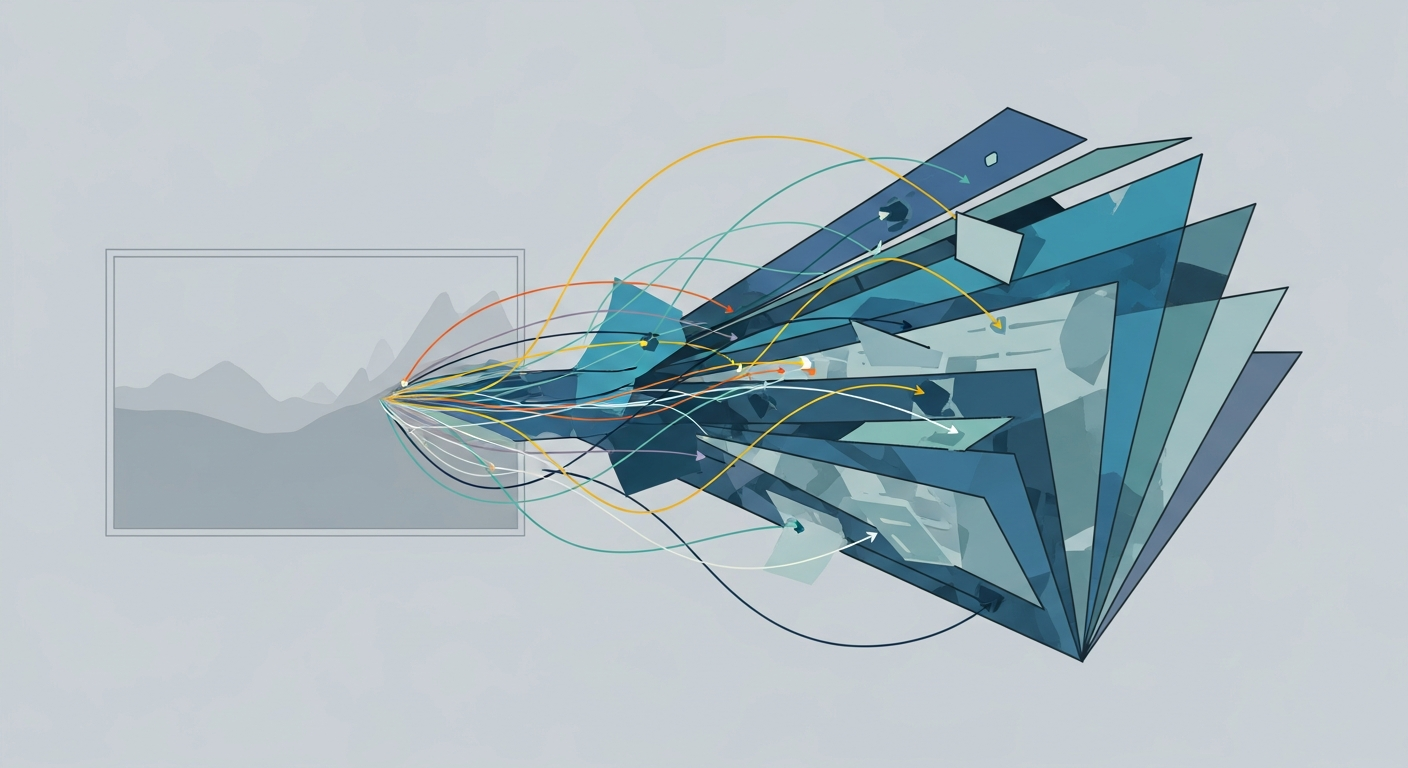

Let's talk about what just became possible. Odyssey-2 Pro and World Labs' API represent two different approaches to the same fundamental breakthrough: generating interactive, explorable environments on demand.

Odyssey's approach is temporal. You type "a laughing baby" and their world model generates continuous video you can interact with in real-time. Send another command like "a kitten appears" mid-stream, and the simulation updates instantly. The model predicts how the world evolves frame-by-frame, learning physics and behaviors from massive video datasets. Right now it runs for minutes, but hours and full days are coming next.

World Labs takes a spatial approach. Upload any image, video, or text prompt and their model, called Marble, generates a complete 3D environment with layout, depth, and lighting in about five minutes. You can walk through it in a browser and even export as Gaussian splats or meshes for use in traditional 3D pipelines.

The technical leap here isn't just about rendering pretty pictures. These models understand causality and physics. They've learned how objects interact, how lighting changes when you move, how materials respond to forces. That's why Odyssey calls this their "GPT-2 moment." Just as language models learned the structure of language from text, world models are learning the structure of reality from video.
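To make the frame-by-frame idea behind the temporal approach concrete, here is a toy sketch in Python. It is not Odyssey's architecture or API: the function names are hypothetical, and a "frame" is just the set of things visible in the scene, where a real world model would predict pixels. It only shows the loop structure, where each new frame is conditioned on what came before plus any command that arrives mid-stream.

```python
from __future__ import annotations

from typing import Optional


def predict_next_frame(history: list[set[str]], command: Optional[str]) -> set[str]:
    """Hypothetical stand-in for a learned frame predictor (not a real model)."""
    frame = set(history[-1])  # the scene persists from the previous frame
    if command is not None:
        frame.add(command)    # a mid-stream command changes what comes next
    return frame


def rollout(prompt: str, commands: dict[int, str], steps: int) -> list[set[str]]:
    """Generate `steps` frames after the initial one, strictly one at a time."""
    history = [{prompt}]
    for t in range(1, steps + 1):
        # commands.get(t) is None unless the user typed something at step t
        history.append(predict_next_frame(history, commands.get(t)))
    return history


# The prompt and command are the examples from the episode; the timing is made up.
frames = rollout("a laughing baby", {3: "a kitten appears"}, steps=4)
for t, frame in enumerate(frames):
    print(t, sorted(frame))
```

The point of the loop is that nothing is rendered ahead of time, which is why a command typed at step 3 can change every frame from step 3 onward.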

Financial Analysis

The pricing models signal serious commercial intent. Both APIs are priced for experimentation and rapid adoption. Odyssey offers JavaScript and Python SDKs with mobile versions coming, while World Labs integrates with standard 3D pipelines that studios already use.

Look at the early adopters to understand the financial trajectory. Escape.ai is turning 2D films into explorable 3D spaces. Interior AI visualizes renovations instantly for paying customers. xFigura converts architectural sketches into walkable presentations, compressing weeks of 3D modeling work into minutes.

The robotics angle might be the biggest financial opportunity. Generating thousands of training environments from a few images instead of manually building each one could slash robotics development costs by orders of magnitude. The NVIDIA Isaac Sim integration suggests NVIDIA sees this clearly.

Gaming studios spend millions on environment artists and months on level design. If a world model can generate explorable environments from prompts or concept art, that's not just cost savings, it's timeline compression. Ship games faster with smaller teams.

Medical training is another massive market. Traditional medical simulation costs hundreds of thousands for physical setups. Generating operating rooms procedurally for student practice at a fraction of the cost opens entirely new business models for medical education.

The infrastructure play matters too. These models require serious compute, which means cloud providers will see increased revenue. But more importantly, whoever controls the best world models controls a new layer of the computing stack, similar to how OpenAI positioned itself with language models.

Market Disruption

Let's be clear about who's threatened. Traditional 3D modeling software companies like Autodesk should be paying very close attention. When you can generate environments from text or images, the value proposition of complex modeling tools starts to erode. Not immediately, but the trajectory is obvious.

Game engines like Unity and Unreal won't disappear, but their role shifts. Instead of being environments where artists painstakingly place every asset, they become environments where AI-generated worlds get refined and polished. The skill requirements for game development fundamentally change.

The architectural visualization market gets compressed. Firms charging tens of thousands for 3D walkthroughs of planned buildings face competition from tools that generate those walkthroughs in minutes. The value moves from technical execution to creative direction and client relationships.

Stock footage and 3D asset marketplaces face existential questions. Why buy a pre-made 3D office environment when you can generate exactly what you need? Companies like TurboSquid and Shutterstock need new business models.

But here's the interesting part: this also creates entirely new markets. Escape.ai's model of turning films into explorable spaces didn't exist before. Procedural emergency response training at scale wasn't economically viable. Generated worlds for robotics training open possibilities that weren't even considered.

The companies best positioned are those already sitting on massive video datasets and compute infrastructure. Google, Meta, and ByteDance have both. Startups like Odyssey and World Labs have first-mover advantage but will need continued funding to compete with hyperscaler resources.

Cultural & Social Impact

We're about to see a fundamental shift in how people interact with media. Watching a movie becomes exploring a movie. Viewing a photo becomes stepping into that scene. This isn't incremental, it's categorical.

Education transforms when students can step into historical events, walk through cellular biology, or practice emergency responses in generated scenarios. The difference between reading about the Roman Forum and walking through a generated reconstruction is the difference between abstract knowledge and embodied understanding.

But let's talk about the concerning aspects. We're already drowning in AI-generated content. Interactive AI slop could be far more addictive than static slop. Imagine TikTok but every video is a world you can step into and modify. The attention capture potential is staggering.

Authenticity becomes even more fraught. When any image can become an explorable 3D space, distinguishing between captured reality and generated fantasy gets harder. We'll need new literacies around world model outputs just as we're still figuring out how to handle deepfakes.

The democratization angle matters. A solo developer can now create game worlds that would have required a team of 20 environment artists. That's genuinely empowering. But it also floods every creative field with more output, making discovery and curation more important than creation itself.

Social interaction in virtual spaces changes too. Instead of meeting in pre-built environments, groups could generate custom spaces on the fly. "Let's meet in a cozy coffee shop" becomes "let me generate us a cozy coffee shop." The implications for virtual collaboration and remote work are significant.

Executive Action Plan

If you're a business leader, here's what you need to do now.

First, run experiments immediately. Don't wait for perfect use cases. Odyssey offers free trials and their APIs are accessible. Spend a week having your team generate environments relevant to your business. What could you do with instant 3D visualization of products? How might training scenarios change? You can't strategize about a technology you haven't touched.

Second, audit your 3D and visual content pipeline. Where are you spending time and money on environment creation, architectural visualization, or training simulations? Those are your immediate opportunities. Calculate what 10x speed improvements and 90% cost reductions would mean for your business model. Then build pilots around the highest-value targets.

Third, consider the talent implications. You still need creative direction, you still need people who understand composition and user experience. But the execution skills are shifting from technical modeling to prompt engineering and curation. Start cross-training your 3D artists and designers now. The ones who adapt fastest will deliver outsized value.
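For the audit step, the 10x and 90% calculation can be roughed out in a few lines of Python. This is a back-of-the-envelope sketch, not a forecast: the function name is made up, and the dollar figure, turnaround time, and yearly volume are hypothetical placeholders to swap for your own numbers. Only the 10x speedup and 90% cost reduction come from the scenario above.

```python
def project_pipeline(baseline_cost: float, baseline_days: float,
                     speedup: float = 10.0, cost_reduction: float = 0.90) -> dict:
    """Project cost and turnaround for one deliverable under the assumed gains."""
    new_cost = round(baseline_cost * (1.0 - cost_reduction), 2)
    new_days = round(baseline_days / speedup, 2)
    return {
        "new_cost": new_cost,
        "new_days": new_days,
        "saved_per_deliverable": round(baseline_cost - new_cost, 2),
    }


# Hypothetical inputs: a $40,000 walkthrough that takes 20 working days today.
projection = project_pipeline(baseline_cost=40_000, baseline_days=20)

# Hypothetical volume: 12 such deliverables a year.
annual_savings = projection["saved_per_deliverable"] * 12

print(projection)
print(f"Projected annual savings: ${annual_savings:,.0f}")
```

Run it once per line item in your audit and sort by savings; the biggest numbers are your pilot candidates.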

The companies that treat this as "interesting technology to monitor" will find themselves outpaced by competitors who treat it as infrastructure to build on today. This is the GPT-2 moment. The ChatGPT moment for world models is coming faster than you think.
