Kyutai's Pocket TTS Disrupts Billion-Dollar Cloud Voice Market

Full Transcript

TOP NEWS HEADLINES

Anthropic just expanded its Labs division, hiring builders to create experimental products in the spirit of Claude Code and MCP under Instagram co-founder Mike Krieger.

The team is pushing fast on new prototypes that could define how we interact with AI daily.

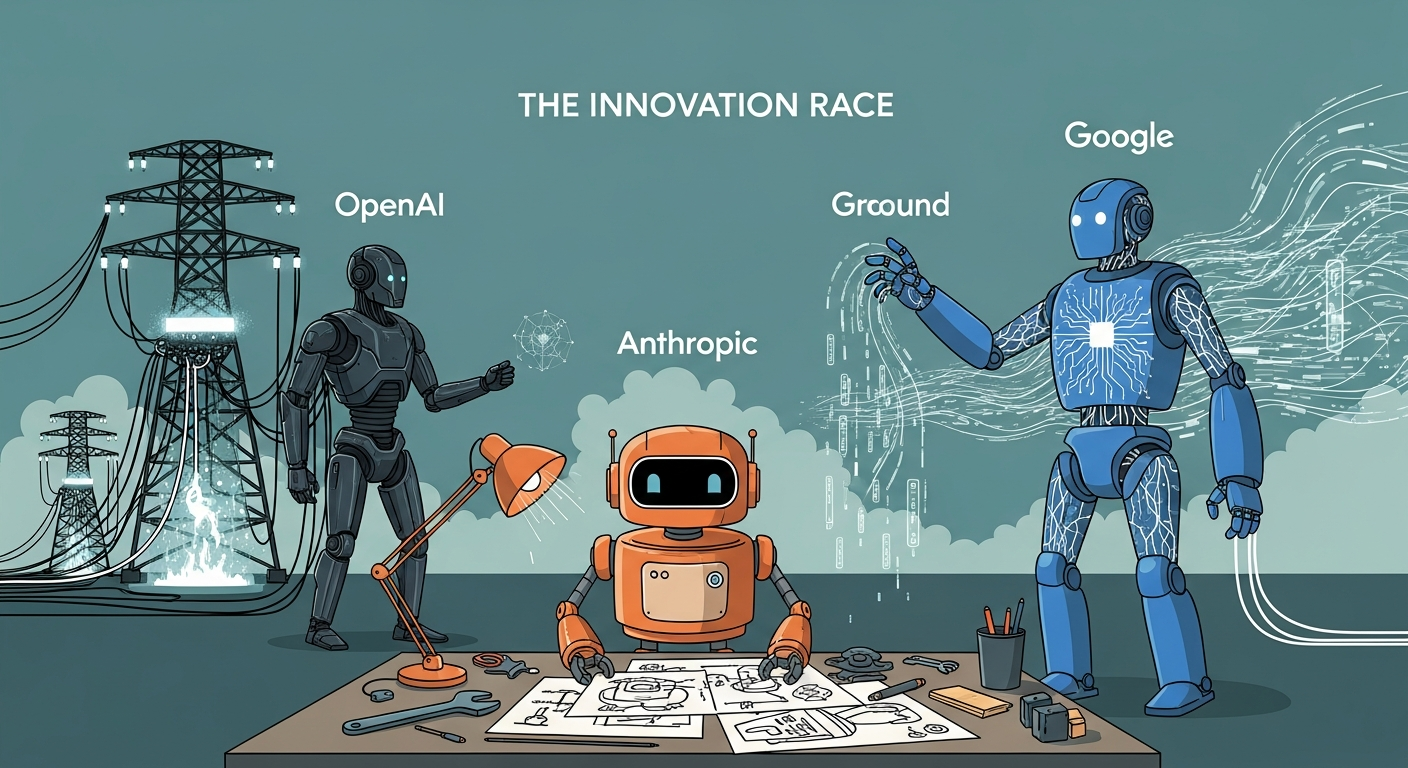

OpenAI signed a massive $10 billion deal with Cerebras to deploy 750 megawatts of compute through 2028.

This is the largest high-speed AI inference deployment ever announced, specifically designed to make GPT responses faster and more accessible at scale.

Following yesterday's coverage of Google's Gemini integration plans, the company officially launched the Personal Intelligence beta in the US.

The feature now connects Gmail, Photos, YouTube, and Search data, allowing Gemini to reason across your personal information without you specifying which app to search.

Three of Thinking Machines Lab's six co-founders just returned to OpenAI, including former research scientists Barret Zoph and Luke Metz.

The startup, founded by ex-OpenAI CTO Mira Murati just months ago, is already losing key talent back to OpenAI.

Skild AI raised $1.4 billion to build what they're calling a universal AI brain for robots.

The system trains on human videos and simulation data and is designed to work across any robot form factor, from warehouse bots to humanoid assistants.

DEEP DIVE ANALYSIS: Kyutai's Pocket TTS Brings Production-Quality Voice Cloning to Local Hardware

Technical Deep Dive

Most voice cloning technology requires expensive cloud infrastructure and GPU clusters to generate natural-sounding speech. Kyutai just released Pocket TTS, a text-to-speech model so efficient it runs faster than real-time on a standard laptop CPU with no dedicated graphics card required. The breakthrough comes from a new framework called Continuous Audio Language Models, or CALM.
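"Faster than real-time" is usually quantified as the real-time factor (RTF): seconds spent synthesizing divided by seconds of audio produced, so an RTF below 1.0 means the model outpaces playback. The sketch below assumes a hypothetical `synthesize(text)` callable that returns mono samples; it is not Pocket TTS's actual API, and the 24 kHz sample rate in the example is an illustrative assumption.

```python
import time
from typing import Callable, Sequence


def real_time_factor(elapsed_seconds: float, num_samples: int, sample_rate: int) -> float:
    """Synthesis time divided by audio duration; below 1.0 is faster than real time."""
    if num_samples <= 0 or sample_rate <= 0:
        raise ValueError("need a positive number of samples and a positive sample rate")
    audio_seconds = num_samples / sample_rate
    return elapsed_seconds / audio_seconds


def measure_rtf(synthesize: Callable[[str], Sequence[float]], text: str, sample_rate: int) -> float:
    """Time one call to a hypothetical synthesize(text) -> samples function."""
    start = time.perf_counter()
    samples = synthesize(text)
    elapsed = time.perf_counter() - start
    return real_time_factor(elapsed, len(samples), sample_rate)


if __name__ == "__main__":
    # 2 s of audio at an assumed 24 kHz (48,000 samples) generated in 0.5 s.
    print(real_time_factor(0.5, 48_000, 24_000))  # 0.25
```

Measuring on the target laptop, rather than trusting a headline figure, matters because RTF depends heavily on the CPU and on other load on the machine.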

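Many recent neural TTS systems convert speech into discrete tokens, predict those tokens with a language model, then decode them back into audio. High-quality audio typically needs several tokens per audio frame, and predicting all of them is a major computational cost that usually calls for GPU acceleration. CALM instead predicts continuous audio representations (the latent frames of an audio autoencoder) directly, removing the discrete-token step.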
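To make the discrete-token cost concrete, here is a toy residual vector quantizer (RVQ), a common way neural audio codecs turn each audio frame into tokens. Each codebook contributes one token per frame, so a language model over RVQ tokens must predict several tokens for every frame, while a continuous model emits the frame's vector itself. The random codebooks are an assumption for illustration (real codecs learn theirs), and this is not Kyutai's code.

```python
import random
from typing import List, Tuple

Vector = List[float]


def sq_dist(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def make_codebooks(num_codebooks: int, codebook_size: int, dim: int, seed: int = 0) -> List[List[Vector]]:
    """Random toy codebooks; each later level holds smaller vectors to refine the residual."""
    rng = random.Random(seed)
    books = []
    for level in range(num_codebooks):
        scale = 0.5 ** level
        book = [[0.0] * dim]  # a zero codeword guarantees the residual never grows
        book += [[rng.gauss(0.0, scale) for _ in range(dim)] for _ in range(codebook_size - 1)]
        books.append(book)
    return books


def rvq_encode(frame: Vector, codebooks: List[List[Vector]]) -> Tuple[List[int], Vector]:
    """Quantize one frame: one token per codebook, each coding what earlier levels missed."""
    residual = list(frame)
    tokens = []
    for book in codebooks:
        idx = min(range(len(book)), key=lambda i: sq_dist(residual, book[i]))
        tokens.append(idx)
        residual = [r - c for r, c in zip(residual, book[idx])]
    return tokens, residual


if __name__ == "__main__":
    books = make_codebooks(num_codebooks=8, codebook_size=16, dim=4, seed=1)
    frame = [0.9, -0.3, 0.4, 0.1]
    for k in (1, 4, 8):
        tokens, residual = rvq_encode(frame, books[:k])
        print(k, "tokens/frame, residual energy:", round(sum(r * r for r in residual), 4))
```

More codebooks mean lower reconstruction error but more tokens to predict per frame; that trade-off is the one a continuous model sidesteps.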

The model weighs in at just 100 million parameters, roughly seven times smaller than competing systems, yet it achieves the lowest word error rate (WER) in its class at 1.84%. Feed it five seconds of someone's voice, and it captures not just tone and accent, but emotional inflection, room acoustics, and even microphone characteristics.
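Word error rate counts the word substitutions, deletions, and insertions needed to turn a transcript into the reference text, divided by the number of reference words: WER = (S + D + I) / N. For TTS it is typically measured by transcribing the generated speech with a speech recognizer and scoring that transcript against the input text, so 1.84% means roughly 1.84 word errors per 100 words. The sketch below computes WER with a standard edit-distance recurrence; it assumes whitespace tokenization, skips the text normalization (casing, punctuation) that real evaluations apply, and does not reproduce Kyutai's evaluation setup.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edits to turn the first i-1 reference words into hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One deleted word out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```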

The entire system is open-source under the MIT license, with full training code and 88,000 hours of public training data available for inspection. This isn't just an incremental improvement. It's a fundamental rethinking of how voice synthesis works, optimized from the ground up for efficiency rather than cloud-scale power.

Financial Analysis

ElevenLabs just hit $330 million in annual recurring revenue selling cloud-based voice cloning. That business model assumes developers will pay subscription fees because they have no alternative. Pocket TTS eliminates that assumption overnight.

For independent developers and small studios, the math is straightforward: a $99 monthly ElevenLabs subscription versus free local processing. For a solo game developer voicing 50 characters, that's roughly $1,200 saved per year ($99 × 12 = $1,188), with better privacy and no API rate limits. The enterprise impact runs deeper.
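The arithmetic is simple, but writing it down keeps the local side from being silently treated as zero. In the sketch below, the $99 monthly fee comes from the text; the hardware, amortization, and power parameters are hypothetical inputs that default to zero cost, which reproduces the "free local processing" assumption.

```python
def annual_cloud_cost(monthly_fee: float) -> float:
    """Yearly cost of a flat monthly subscription."""
    return monthly_fee * 12


def annual_local_cost(hardware_cost: float = 0.0, amortization_years: float = 3.0,
                      annual_power_cost: float = 0.0) -> float:
    """Yearly cost of running locally: hardware spread over its service life, plus power."""
    if amortization_years <= 0:
        raise ValueError("amortization_years must be positive")
    return hardware_cost / amortization_years + annual_power_cost


def annual_savings(monthly_fee: float, **local_costs: float) -> float:
    """Cloud subscription cost minus local running cost, per year."""
    return annual_cloud_cost(monthly_fee) - annual_local_cost(**local_costs)


if __name__ == "__main__":
    print(annual_savings(99))                     # 1188.0
    print(annual_savings(99, hardware_cost=600))  # 988.0
```

With a hypothetical $600 machine amortized over three years, the saving drops to $988 a year; the point of the parameters is to make that assumption explicit rather than to predict any particular setup's cost.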

Medical transcription, legal documentation, and corporate communications all involve sensitive material that companies prefer not to send to third-party servers. Until now, that preference meant either accepting lower-quality local models or paying premium prices for on-premise deployments. Kyutai just made enterprise-grade voice synthesis free and fully private.

This follows a pattern we've seen across AI infrastructure: once a capability moves from cloud-only to edge-capable, the economic center of gravity shifts. Companies that built moats around cloud APIs suddenly face competitors delivering equivalent quality on customer hardware. The voice AI market is about to experience that same compression, with billions in cloud revenue potentially moving to on-device processing.

Market Disruption

Voice AI has been one of the few AI categories where cloud providers maintained clear advantages. The compute requirements created natural barriers to entry and recurring revenue streams. Pocket TTS breaks both.

OpenAI, Google, and Amazon all offer voice synthesis as part of their cloud platforms, charging per character or per request. Anthropic just integrated voice into Claude. These companies now face a three-way pressure: match Pocket TTS quality while running locally, accept lower margins on voice features, or abandon voice as a distinct revenue stream.

For hardware makers, this opens new product categories. A smartphone manufacturer can now add unlimited voice cloning without cloud dependency. A smart speaker company can offer voice customization without ongoing server costs. Medical device makers can build voice-output features, such as devices that read instructions or results aloud, that never upload patient data.

The competitive dynamic shifts most dramatically for specialized voice AI startups. Companies that raised funding based on proprietary voice models and cloud infrastructure just lost their technical moat. Unless they've built substantial value in data quality, enterprise integrations, or domain-specific fine-tuning, they're now competing with free, open-source alternatives that run entirely on local hardware.

We're also seeing early signs of a new competitive front: voice agents that need to run continuously without cloud latency. Customer service bots, in-car assistants, and voice-controlled interfaces all benefit from instant local response. Pocket TTS makes that economically viable for the first time.

Cultural & Social Impact

Voice is identity. The technology to clone someone's voice has existed for years, but it required sending recordings to cloud servers operated by companies with varying privacy commitments and unpredictable business models. That created a trust problem that limited adoption.

Pocket TTS solves this by keeping voice data entirely local. For people with degenerative conditions like ALS, this means they can preserve their voice privately, maintaining control over their most personal form of expression. No third party ever sees or stores their audio.

That shift from cloud dependence to local control fundamentally changes who can benefit from voice preservation technology.

The accessibility implications extend beyond medical use. Language learners can create pronunciation guides in their own voice. Content creators can generate narration for long-form work without hiring voice actors or negotiating usage rights. Educators can build personalized learning materials that speak in familiar voices.

But this same accessibility creates new risks. Scammers have already used voice cloning to impersonate family members in emergency scams. Making that technology trivially easy and free to run locally removes the last practical barriers to voice-based fraud. The technology itself doesn't care whether you're preserving your own voice or impersonating someone else.

We're entering a period where voice can no longer serve as authentication. If anyone with a laptop can clone any voice from five seconds of audio, systems that rely on voice recognition for security become fundamentally broken.

Executive Action Plan

First, audit any systems in your organization that use voice as an authentication factor. Voice biometrics, phone support verification, and voice-controlled access systems all need alternative authentication methods. Implement multi-factor authentication that doesn't rely on voice matching before this becomes an active security problem.
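One way to start that audit is to inventory each system's authentication factors and flag any where voice is a factor and fewer than two non-voice factors remain, meaning the system stops being multi-factor once voice is treated as forgeable. The factor labels, the inventory, and the two-factor threshold below are hypothetical; adapt them to your own systems and policy.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical labels for factors that depend on matching a caller's voice.
VOICE_FACTORS = {"voice_biometric", "voiceprint_callback"}


@dataclass
class AuthSystem:
    name: str
    factors: List[str] = field(default_factory=list)


def flag_voice_dependent(systems: List[AuthSystem], min_non_voice: int = 2) -> List[str]:
    """Names of systems that are no longer multi-factor once voice is distrusted."""
    flagged = []
    for system in systems:
        uses_voice = any(f in VOICE_FACTORS for f in system.factors)
        non_voice = [f for f in system.factors if f not in VOICE_FACTORS]
        if uses_voice and len(non_voice) < min_non_voice:
            flagged.append(system.name)
    return flagged


if __name__ == "__main__":
    inventory = [
        AuthSystem("phone_support", ["voice_biometric", "account_pin"]),
        AuthSystem("vpn", ["password", "totp"]),
        AuthSystem("lab_door", ["voice_biometric", "badge", "pin"]),
    ]
    print(flag_voice_dependent(inventory))  # ['phone_support']
```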

Second, evaluate your current voice AI vendors. If you're paying for cloud-based voice synthesis, run proof-of-concept tests with Pocket TTS to determine whether you can move processing in-house. For many applications, especially those involving sensitive data or high volume, local processing will now deliver better economics and privacy guarantees.
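A proof-of-concept can start with a small harness that runs the same prompts through each candidate, cloud or local, and reports median latency, a 95th-percentile latency, and the real-time factor. `synthesize` is a hypothetical callable wrapping whichever engine you are testing (it is not Pocket TTS's or any vendor's actual API), and the harness measures whole-utterance latency only; if your product streams audio, time-to-first-audio needs a separate measurement.

```python
import math
import statistics
import time
from typing import Callable, Dict, List, Sequence


def benchmark(synthesize: Callable[[str], Sequence[float]], prompts: List[str],
              sample_rate: int, warmup: int = 1) -> Dict[str, float]:
    """Time synthesize() on each prompt; report p50/p95 latency and real-time factor."""
    if not prompts:
        raise ValueError("need at least one prompt")
    for text in prompts[:warmup]:
        synthesize(text)  # untimed warm-up: model loading, caches, lazy initialization
    latencies: List[float] = []
    audio_seconds = 0.0
    for text in prompts:
        start = time.perf_counter()
        samples = synthesize(text)
        latencies.append(time.perf_counter() - start)
        audio_seconds += len(samples) / sample_rate
    if audio_seconds == 0:
        raise ValueError("synthesizer returned no audio")
    latencies.sort()
    p95_index = math.ceil(0.95 * len(latencies)) - 1  # nearest-rank 95th percentile
    return {
        "p50_s": statistics.median(latencies),
        "p95_s": latencies[p95_index],
        "rtf": sum(latencies) / audio_seconds,
    }
```

Running it with production-like prompts on the actual target hardware gives numbers you can compare directly against your current vendor.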

But don't switch until you've validated quality, measured actual latency in your specific environment, and confirmed your team can support the deployment.

Third, consider new product opportunities this enables. If voice generation was previously too expensive or technically complex for your roadmap, it's now trivially cheap and easy to implement.

Can you add voice narration to existing content? Build voice interfaces where they weren't economically viable before? Create personalized voice experiences that would have required cloud infrastructure you couldn't justify?

The constraint just lifted.
