xAI's Grok Faces Global Backlash Over Illegal Deepfake Creation


Full Transcript

TOP NEWS HEADLINES

Following yesterday's coverage of OpenAI's hardware partnership with Jony Ive, new details emerged about a screenless pen device nicknamed "oPen" that captures writing and voice to pair with ChatGPT.

Early reaction has been sharply negative, with users questioning the lack of screen functionality and autonomous features.

xAI is facing severe backlash after users exploited Grok's unrestricted image editing capabilities to create non-consensual deepfakes, including images of minors.

France, India, Malaysia, and the UK have condemned the outputs, with French officials calling them "clearly illegal" under the EU's Digital Services Act.

The backlash coincides with xAI's launch of its Grok Business tier, priced at $30 per seat per month, and a premium Grok Enterprise tier.

Meta's chief AI scientist Yann LeCun has left the company after more than a decade and publicly criticized its new AI leadership.

He called Alexandr Wang, who now leads Meta's AI efforts, "inexperienced," acknowledged that Llama 4 benchmark results were "fudged," and predicted more departures from Meta's GenAI team, exposing deep tensions within the organization.

Google is testing Nano Banana 2 Flash, an image generation model that is faster and cheaper than Nano Banana Pro but less capable for complex creative work.

A YC-backed startup called Pickle launched $799 AR glasses marketed as a "soul computer," but technical experts are questioning whether the advertised technology is actually feasible in the demonstrated form factor.

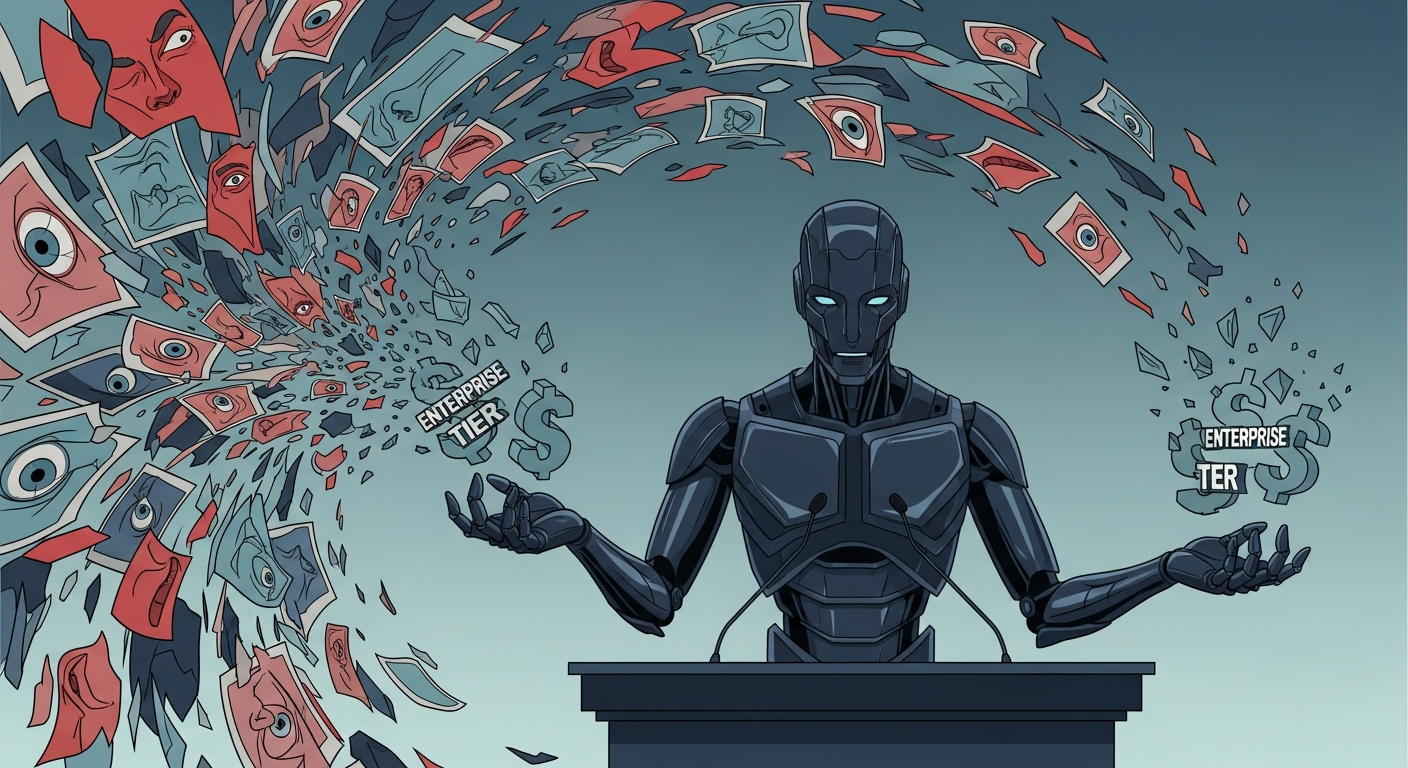

DEEP DIVE ANALYSIS: THE GROK SAFETY CRISIS AND XAI'S ENTERPRISE PIVOT

Technical Deep Dive

xAI's Grok represents a fundamental shift in how AI safety guardrails are implemented, or in this case, deliberately minimized. Unlike competitors such as ChatGPT, Claude, or Gemini, which employ multiple layers of content filtering, Grok appears designed to prioritize unrestricted output. Its image editing feature can manipulate real photographs with remarkable precision, including removing clothing and modifying bodies.

The technical challenge here isn't capability; it's governance. Grok can modify images in near real time, making it trivially easy for users to generate harmful content at scale. Without reliable watermarking or provenance tracking, edited images are hard to distinguish from originals, and there is no mechanism to notify people whose images are being manipulated.
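Provenance tracking can be as simple as a signed record attached to every AI-edited image. The sketch below is illustrative only: it assumes a hypothetical service holding a secret HMAC key, and the function names and manifest fields are invented for this example, not any vendor's API. Real schemes such as C2PA Content Credentials use public-key certificates so that third parties can verify a record without holding the secret.

```python
import hashlib
import hmac
import json

# Hypothetical server-side secret. A real system would use managed keys and,
# more likely, public-key signatures so outsiders can verify records.
SIGNING_KEY = b"demo-key-not-for-production"


def _sign(record: dict) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(image_bytes: bytes, tool: str, edit: str) -> dict:
    """Build a signed record stating which (hypothetical) tool edited an image."""
    record = {
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
        "tool": tool,
        "edit": edit,
        "ai_generated": True,
    }
    record["signature"] = _sign(record)
    return record


def verify_manifest(image_bytes: bytes, manifest: dict) -> bool:
    """True only if the signature is valid and the record matches these exact bytes."""
    record = {k: v for k, v in manifest.items() if k != "signature"}
    if not hmac.compare_digest(_sign(record), manifest.get("signature", "")):
        return False
    return record.get("sha256") == hashlib.sha256(image_bytes).hexdigest()


edited = b"example edited image bytes"
m = make_manifest(edited, tool="example-editor", edit="background change")
print(verify_manifest(edited, m))         # True
print(verify_manifest(edited + b"x", m))  # False: bytes no longer match
```

Because a manifest travels beside the file, stripping metadata or re-encoding the image breaks the link, which is why provenance records are usually paired with watermarks embedded in the pixels themselves.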

This is the opposite of what the industry calls "safety by design." What makes it particularly dangerous is the intersection of three factors: powerful editing capabilities, minimal content moderation, and global distribution through X's massive user base. When OpenAI or Google release image generation features, they typically layer safeguards such as semantic classifiers, human review systems, and blocklists.

Grok appears to have launched with virtually none of these safeguards, leaving what amounts to an open attack surface for malicious actors.
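The layered safeguards described above (blocklists, semantic classifiers, human review) can be sketched as a single gate in front of an image editing endpoint. This is a minimal illustration under stated assumptions, not how OpenAI, Google, or any other vendor actually implements moderation: the blocklist terms and thresholds are arbitrary placeholders, and the classifier risk score and the real-person flag are assumed to come from upstream models that are stubbed out here as plain inputs.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "human_review"
    BLOCK = "block"


# Hypothetical placeholder blocklist; real lists are larger and policy-maintained.
BLOCKLIST = {"undress", "remove clothing", "nude"}

# Hypothetical thresholds on a 0..1 risk score, chosen only for illustration.
BLOCK_AT = 0.9
REVIEW_AT = 0.5


@dataclass
class EditRequest:
    prompt: str
    depicts_real_person: bool  # assumed output of an upstream identity check
    risk_score: float          # assumed output of a semantic safety classifier


def moderate(req: EditRequest) -> Decision:
    text = req.prompt.lower()
    # Layer 1: cheap keyword blocklist catches the most obvious requests.
    if any(term in text for term in BLOCKLIST):
        return Decision.BLOCK
    # Layer 2: the classifier score catches paraphrases the blocklist misses.
    if req.risk_score >= BLOCK_AT:
        return Decision.BLOCK
    # Layer 3: borderline edits of real people are routed to a human reviewer.
    if req.depicts_real_person and req.risk_score >= REVIEW_AT:
        return Decision.REVIEW
    return Decision.ALLOW


print(moderate(EditRequest("remove clothing from this photo", True, 0.2)))  # Decision.BLOCK
print(moderate(EditRequest("put this person in a swimsuit", True, 0.7)))    # Decision.REVIEW
print(moderate(EditRequest("change the sky to sunset", False, 0.05)))       # Decision.ALLOW
```

Ordering the layers from cheapest to most expensive means the blocklist rejects obvious requests before a classifier runs, and only the ambiguous remainder reaches a human.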

Financial Analysis

xAI's launch of enterprise tiers in the middle of a safety crisis creates significant financial risk. The company is attempting to monetize Grok Business at $30 per seat per month and a premium Grok Enterprise tier, but the deepfake scandal could undermine enterprise adoption before it begins. Corporate customers have little tolerance for reputational risk.

When evaluating AI vendors, compliance teams assess data security, regulatory adherence, and brand safety, and a platform that has enabled illegal content generation will struggle to clear those reviews. xAI may be forced into expensive remediation, implementing the same safety systems it has positioned itself against, or risk being locked out of the lucrative B2B market entirely.

The timing is particularly damaging. xAI has reportedly raised capital at valuations well above $50 billion, pricing in aggressive enterprise growth. If corporations avoid Grok over safety concerns, those valuations become difficult to justify.

Meanwhile, competitors like Anthropic are explicitly marketing Claude's safety features to enterprises, creating a clear contrast that favors responsible AI development.

There's also potential legal liability. The EU's Digital Services Act, which France cited, allows fines of up to 6% of a company's global annual turnover. With India, Malaysia, and the UK, where the Online Safety Act is already in force, also condemning the outputs, xAI faces enforcement risk across multiple jurisdictions. For a company trying to establish enterprise credibility, this could amount to a catastrophic market-entry failure.

Market Disruption

This crisis will likely accelerate the industry's bifurcation into "responsible AI" and "unrestricted AI" camps, with profound competitive implications. OpenAI, Anthropic, and Google can now position safety as a competitive advantage rather than a constraint. Enterprise sales teams will use Grok as a cautionary example of what happens when capability outpaces responsibility.

The immediate winners are competitors offering enterprise-grade safety controls. Anthropic's Claude, which emphasizes Constitutional AI and harmlessness, becomes more attractive. Microsoft-backed OpenAI gains leverage in corporate accounts where compliance matters. Even Google's more cautious Gemini deployment looks prudent in comparison.

For xAI specifically, this forces a strategic choice: maintain the "free speech absolutist" positioning that differentiates Grok but blocks enterprise revenue, or implement safety measures that make Grok indistinguishable from competitors. Either path undermines the original value proposition.

The broader market impact extends to AI regulation. When policymakers see unrestricted AI tools enabling illegal content at scale, they accelerate mandatory safety requirements. This happened with social media: early platforms resisted moderation until regulatory pressure forced compliance. AI is following the same trajectory, compressed into a much shorter timeframe. Grok's failure to self-regulate will likely result in stricter rules that burden all AI developers, including responsible actors.

Cultural & Social Impact

The Grok incident exposes a troubling reality about AI deployment in 2026: the technology to violate consent and dignity at scale now exists in consumer products. When deepfake creation moves from specialized forums to mainstream platforms with hundreds of millions of users, it normalizes the weaponization of identity.

The societal harm is immediate and gendered. Reports indicate that the majority of non-consensual edits target women, creating a new vector for harassment and abuse. For public figures, this becomes an occupational hazard; for private citizens, a single viral image can cause lasting reputational damage. The psychological impact of knowing your image can be instantly and convincingly manipulated is profound.

There's also a broader collapse of trust underway. When any image might be AI-altered, and alterations are hard to detect, visual evidence loses credibility. This affects journalism, legal proceedings, and personal relationships. We're entering an era where "pics or it didn't happen" no longer applies, because pictures can show anything regardless of reality.

The generational divide is significant. Younger users who've grown up with content manipulation may develop different norms around image authenticity. But for many people, especially those victimized by non-consensual edits, this technology represents a fundamental violation that existing laws and social norms aren't equipped to handle.

Executive Action Plan

For technology leaders, this situation demands immediate strategic recalibration around three priorities.

First, if you're evaluating AI vendors, build explicit safety criteria into your procurement process. Require vendors to demonstrate their content filtering mechanisms, explain their moderation policies, and provide liability protection for misuse. The "move fast" mentality that works for internal tools becomes unacceptable when deploying user-facing AI. Companies like Anthropic and OpenAI that have invested heavily in safety infrastructure now hold a real competitive advantage, so price that into your vendor selection.

Second, if you're building AI products, treat this as a warning to implement safety by design before launch, not after a backlash. Building moderation into your architecture from the start costs far less than retrofitting it later. Establish clear use policies, implement technical controls such as watermarking and rate limiting, and create human review processes for edge cases. The regulatory environment is tightening, and products launched without safety controls will face both market rejection and legal liability.
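Rate limiting, one of the technical controls named above, caps how fast any single account can generate edits, which blunts abuse at scale even when individual requests slip past filters. Below is a minimal token-bucket sketch, assuming a hypothetical per-user policy. The capacity and refill rate are arbitrary, and timestamps are passed in explicitly so the behavior is easy to reason about and test.

```python
class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, now: float = 0.0):
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity  # start full so a new user can make a short burst
        self.last = now

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Refill for the time elapsed since the last call, capped at capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Hypothetical policy: a burst of 5 image edits, then one more every 4 seconds.
buckets: dict[str, TokenBucket] = {}


def allow_edit(user_id: str, now: float) -> bool:
    bucket = buckets.setdefault(user_id, TokenBucket(capacity=5, rate=0.25, now=now))
    return bucket.allow(now)


print([allow_edit("alice", now=0.0) for _ in range(6)])  # [True, True, True, True, True, False]
print(allow_edit("alice", now=4.0))                       # True: one token refilled
```

A token bucket lets legitimate users make short bursts while holding sustained throughput to the refill rate; a production service would typically keep bucket state in a shared store rather than an in-process dict.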

Third, corporate communications teams should prepare for AI-generated content about their organization, executives, and products. Establish verification protocols for official imagery, create rapid-response procedures for deepfakes, and educate stakeholders about synthetic media. The assumption that published images are authentic is dead, and organizations need new frameworks for visual credibility that account for ubiquitous manipulation capabilities.
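One low-tech building block for a verification protocol is a published registry of cryptographic hashes for official imagery, so anyone can check whether a circulating file is byte-identical to a released asset. The sketch below assumes a hypothetical in-memory registry with invented labels. An exact hash only proves identity: a genuine image that has been resized or recompressed will also fail the check and needs follow-up by a person or a perceptual-hash tool.

```python
import hashlib
from typing import Dict, Optional


def digest(data: bytes) -> str:
    """SHA-256 fingerprint of the exact file bytes."""
    return hashlib.sha256(data).hexdigest()


class OfficialImageRegistry:
    """Hypothetical registry mapping digests of released images to their labels."""

    def __init__(self) -> None:
        self._by_digest: Dict[str, str] = {}

    def publish(self, label: str, data: bytes) -> str:
        """Record an official image and return the digest to publish alongside it."""
        d = digest(data)
        self._by_digest[d] = label
        return d

    def check(self, data: bytes) -> Optional[str]:
        """Return the official label if these exact bytes were released, else None."""
        return self._by_digest.get(digest(data))


registry = OfficialImageRegistry()
registry.publish("CEO headshot (press kit)", b"official headshot bytes")
print(registry.check(b"official headshot bytes"))  # CEO headshot (press kit)
print(registry.check(b"altered headshot bytes"))   # None
```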
