Google Ships 60 AI Products in 2025, Escalating Platform War

Episode Summary

TOP NEWS HEADLINES Let's jump into today's biggest AI stories. OpenAI is admitting something critical: their AI browser, Atlas, faces persistent prompt injection vulnerabilities that may never be ...

Full Transcript

TOP NEWS HEADLINES

OpenAI is admitting something critical: their AI browser, Atlas, faces persistent prompt injection vulnerabilities that may never be fully solved.

These are attacks where malicious instructions embedded in web content can manipulate agent behavior.

Even with improved mitigation techniques, the company acknowledges this remains an unsolved problem as agent capabilities expand across the open web.

OpenAI rolled out "Your Year with ChatGPT" - basically their version of Spotify Wrapped.

It shows you how many messages, chats, images, and even em-dashes you generated this year, plus assigns you an "archetype" persona based on your usage patterns.

The foldable iPhone is taking shape, and it's going wide.

According to The Information, Apple's 2026 foldable will have an aspect ratio similar to their largest iPads in landscape mode - making it wider than Samsung's Galaxy Z Fold or Google's Pixel Fold.

This could help Apple instantly differentiate in a crowded foldable market.

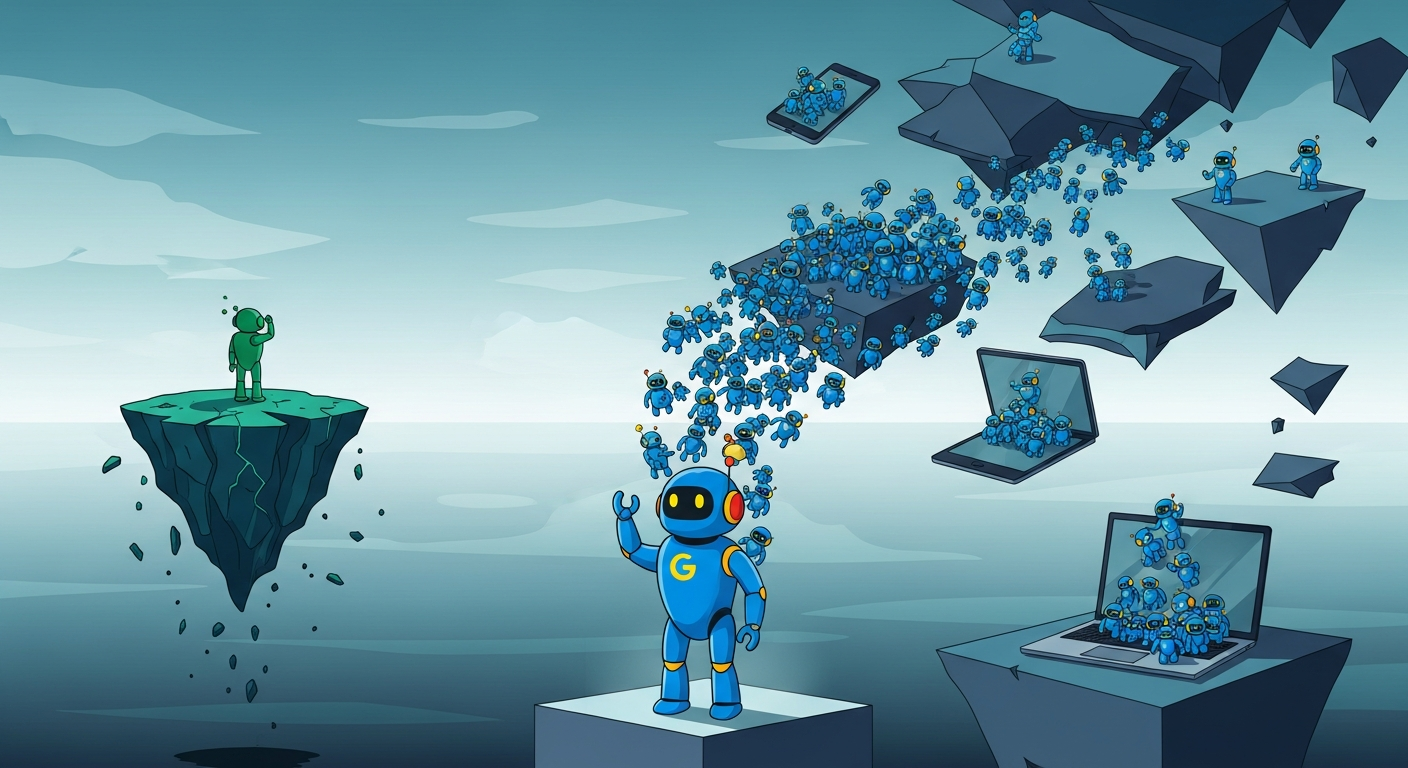

Google just dropped their year-end flex: 60 major AI releases in 2025.

That's more than one per week, including Gemini 2.5, Gemini 3, their viral Nano Banana image editor, and AI Mode in Search.

They're making it clear - 2025 was Google's revenge tour year against OpenAI.

And in hardware news, scientists found a way to "reboot" vision in adults with lazy eye.

A mouse study showed that briefly anesthetizing the retina of an amblyopic eye for just a few days can restore the brain's visual responses.

The treatment seems to reset how the brain engages with specific neurons, though researchers still don't fully understand why.

DEEP DIVE ANALYSIS

Let's dig into Google's massive 2025 AI push - because this isn't just about product velocity. This is about a fundamental shift in the AI competitive landscape.

Technical Deep Dive

Google shipped 60 AI products in 2025, but the real story is the architectural strategy behind them. They're not just building models - they're building an integrated AI operating system. Gemini 2.

5 in March established their competitive foundation, then Gemini 3 in November represented what they called a "new era of intelligence." But look at how they deployed it: Nano Banana went directly into Search, Photos, and NotebookLM. AI Mode became a native part of Search itself.

Flow brought video generation to consumers. Chrome got a complete AI makeover. This is a multi-surface strategy.

Every Google product is becoming an AI surface. They made Gemini 2.0 completely free, which is aggressive loss-leader economics - they're willing to subsidize model access to own the interface layer.

Search Live enables real-time AI conversations, fundamentally changing how users interact with information retrieval. For developers, they shipped Gemini CLI, Jules, and Antigravity. This isn't developer relations - it's infrastructure capture.

If developers build on Google's tooling, they're locked into Google's ecosystem. The technical bet is clear: own every layer from silicon to interface. Their custom TPU chips, their models, their distribution channels, their developer tools.

It's vertical integration at massive scale, and the pace suggests they're treating 2026 as the year they need to cement platform dominance before the window closes.

Financial Analysis

The economics here are fascinating and slightly terrifying for competitors. Google is spending billions on compute while simultaneously giving away premium AI features. They can afford this because they're subsidizing AI with search advertising revenue of over $200 billion annually.

That's a war chest no pure AI company can match. Making Gemini 2.0 free is a direct attack on OpenAI's primary revenue model.

OpenAI charges $20 per month for ChatGPT Plus, $200 per month for Pro. Google is essentially saying "we'll provide comparable capability for zero dollars because we monetize through advertising and cloud services." That's not competition - that's predatory pricing enabled by parent company cash flow.

The Nano Banana viral moment is particularly clever financially. It cost Google relatively little to develop and deploy, but generated massive organic marketing value. When millions of users try a feature in Search or Photos, Google collects behavioral data worth far more than any direct monetization.

They're trading immediate revenue for long-term moat construction. For investors, this signals that the AI platform war will be won by companies with massive existing distribution and alternative revenue streams. Pure-play AI companies face a profitability crisis: they need to charge enough to cover compute costs, but they're competing against companies willing to operate AI at a loss.

Anthropic, despite raising billions, doesn't have Google's ability to subsidize indefinitely. Neither does OpenAI, unless Microsoft increases their subsidy commitment.

Market Disruption

This 60-product blitz is specifically designed to prevent OpenAI from becoming the default AI interface. And it's working. Consumer habits are already consolidating around ChatGPT, but Google has something OpenAI doesn't: mandatory touchpoints.

Billions of people use Google Search daily. Hundreds of millions use Chrome. Gmail has 1.

8 billion users. YouTube exceeds 2 billion monthly users. Each of these is now an AI entry point.

You don't choose to use Google AI - you encounter it automatically during your existing workflow. That's distribution advantage that no startup can replicate. Andreessen Horowitz's consumer AI data shows fewer than 10% of ChatGPT users touch another assistant.

But what happens when Google AI isn't "another assistant" - it's already inside the tools you use? For the broader AI market, this creates a squeeze. On one end, you have Google integrating AI into everything.

On the other end, you have OpenAI building ChatGPT into a super-app with 800-900 million weekly users. Where does that leave everyone else? Anthropic, despite shipping Claude Code and browser capabilities, has maybe 15% of ChatGPT's user base.

Startups face an impossible question: how do you compete when the big players control both the models and the distribution? The answer appears to be vertical specialization. Focused tools like Cursor for coding, Descript for video editing, or Albert Invent for chemistry can succeed by being 10x better at one specific thing.

But horizontal AI platforms? That market is rapidly closing. We're watching the end of the "hundreds of AI companies will flourish" era and entering "three platforms plus vertical specialists" reality.

Cultural & Social Impact

Here's what's culturally significant: Google is normalizing AI as infrastructure rather than tool. When AI is embedded in Search, you stop thinking "I'm using AI" and start just... searching.

That's the behavior change Google is engineering. They want AI to fade into the background of computing itself. This has profound implications for how society adapts to AI.

Instead of a dramatic "now we're using AI" moment, we get gradual acclimatization. You're editing photos with Nano Banana without thinking about the model architecture behind it. You're getting AI-enhanced search results without explicitly choosing to use AI.

This reduces resistance but also reduces awareness. The flip side is the transparency problem. When AI becomes invisible infrastructure, how do users understand what they're interacting with?

Google Search with AI Mode doesn't clearly distinguish between traditional results and generated content. That's not a bug - it's a design choice that prioritizes seamless experience over informed consent. Users are being trained to trust AI outputs without necessarily understanding their provenance or limitations.

For younger users especially, this is their computing baseline. They're not comparing AI-enabled products to pre-AI versions - this is just how computers work. That shifts cultural expectations around what technology should do.

The question "can a computer understand this?" becomes "why isn't the computer understanding this better?" It's a fundamental reframing of the human-computer relationship.

The bigger cultural question is about platform consolidation in society's information layer. If Google succeeds in making Gemini the default AI interface for billions of users, they control the lens through which people access information. That's an extraordinary amount of cultural power concentrated in one company's product decisions and model training choices.

Executive Action Plan

If you're running a business, here's what this Google push means for your strategy. First, assume AI will be free at the commodity level. Don't build business models that depend on charging for basic AI access.

Google's willingness to give away Gemini 2.0 signals that frontier model access is becoming a loss leader. Your competitive advantage must come from data, workflow integration, or specialized capability - not from model access itself.

If your startup's pitch is "we provide easy access to AI models," you're already obsolete. The new pitch needs to be "we provide AI that understands your specific domain, trained on your specific data, integrated into your specific workflow." Second, pick your platform dependency carefully.

If you're building on Google's tools, you gain distribution but lose pricing power. They can undercut you anytime they want. If you're building on OpenAI, you have more pricing independence but less distribution reach.

The safest play is model-agnostic architecture - build your product to work with multiple providers so you can't be held hostage by platform decisions. This adds engineering complexity but provides strategic optionality. Third, focus obsessively on the interface layer.

The technical deep dive showed that Google is pushing AI into every surface they control. You can't compete on model quality or compute scale. But you can compete on user experience, workflow design, and domain expertise.

The companies winning in 2026 will be the ones who make AI feel invisible while delivering massive productivity gains. That's a design problem, not a model problem. Invest in UX research, workflow automation, and reducing cognitive overhead for users.

The war for AI dominance will be won or lost at the interface layer, not the model layer.

Never Miss an Episode

Subscribe on your favorite podcast platform to get daily AI news and weekly strategic analysis.