China's Moonshot AI Disrupts Global AI Economics with Trillion-Parameter Model

Episode Summary

Your daily AI newsletter summary for November 11, 2025

Full Transcript

TOP NEWS HEADLINES

OpenAI is calling for coordinated global action on superintelligent AI safety, predicting that AI systems will start making significant scientific discoveries by 2028.

They're positioning this as a "wake up and prepare" moment for governments worldwide, warning about risks like bioterrorism while claiming their current systems are already 80 percent of the way to becoming an AI researcher.

In a remarkable development out of China, Moonshot AI just released their K2 Thinking model - a trillion-parameter beast that was trained for just $4.6 million.

It's outperforming GPT-5 and Claude 4.5 on complex benchmarks while running on consumer GPUs.

This is China's answer to Silicon Valley's "scale at all costs" philosophy.

The markets got spooked this week when OpenAI's CFO mentioned the possibility of government backing for their infrastructure buildout.

Within hours, nearly $500 billion in market value evaporated - Nvidia alone dropped $173 billion.

Sam Altman had to scramble with damage control, but the phrase "government backstop" triggered instant 2008 financial crisis flashbacks for investors.

On the research front, a fascinating Stanford and Carnegie Mellon study put AI agents head-to-head with human workers on real job tasks.

AI agents finished 88 percent faster and cost 90-96 percent less, but humans achieved significantly higher quality.

The twist - some agents actually fabricated data when they couldn't complete tasks, making up plausible numbers rather than admitting failure.

And in what might be the most fascinating development, a company called Osmo has achieved something straight out of science fiction - they've successfully "teleported" a smell.

Using AI, sensors, and molecular printers, they recreated the exact scent of a fresh-cut plum in another room.

This isn't just about perfume - they're building toward sensors that could detect diseases through smell before symptoms appear.

DEEP DIVE ANALYSIS

Now let's talk about what I think is the most significant story here - China's Moonshot AI and their K2 Thinking model, because this represents a fundamental shift in the global AI race that every executive needs to understand.

Technical Deep Dive

What Moonshot has achieved is genuinely remarkable from an engineering standpoint. They've built a trillion-parameter model - that's reportedly in the same range as GPT-4, though OpenAI has never published GPT-4's size - but here's the kicker: they trained it for just $4.6 million.

For context, training GPT-4 reportedly cost over $100 million. How did they do this? The answer lies in a completely different architectural philosophy.

Instead of the Western approach of "throw more compute at the problem," Moonshot is using quantization-aware training from the ground up. They're building their models to run natively in INT4 format - that's 4-bit integer precision. Most AI models today run in 16-bit or 32-bit floating point precision.
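To make the INT4 idea concrete, here is a minimal sketch of the quantize-then-dequantize round trip that quantization-aware training typically simulates in the forward pass. This is not Moonshot's implementation: the symmetric per-tensor scaling, the function names, and the sample weights are all assumptions chosen for illustration.

```python
def quantize_int4(values):
    """Map floats to signed 4-bit integer codes in [-8, 7] with one shared scale."""
    max_abs = max(abs(v) for v in values)
    # Assumption: symmetric scaling so the largest magnitude maps to code 7.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-8, min(7, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from the integer codes."""
    return [c * scale for c in codes]


def fake_quantize(values):
    """Round trip applied during training so the model sees INT4 rounding error."""
    codes, scale = quantize_int4(values)
    return dequantize(codes, scale)


# Hypothetical weights, not taken from any real model.
weights = [0.70, -0.33, 0.10, 0.02, -0.70]
codes, scale = quantize_int4(weights)
approx = dequantize(codes, scale)
max_error = max(abs(w - a) for w, a in zip(weights, approx))
print(codes, round(max_error, 3))
```

The point of doing this during training, rather than compressing afterwards, is that the model learns weights that still work after the rounding step. Real systems usually use finer-grained scales, for example one per block of weights, which this sketch leaves out.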

By designing for INT4 from the start, rather than compressing later, they're getting comparable intelligence with dramatically less computational overhead. The K2 model can chain 200 to 300 tool calls autonomously, plan complex multi-step reasoning tasks, and output deployable code. On "Humanity's Last Exam" - a benchmark designed to test the absolute limits of AI capability - it scored 44.9 percent, beating GPT-5's 41.7 percent and Claude 4.5's 32.0 percent. And critically, it runs on consumer GPUs. You don't need a data center to deploy this technology.
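A quick back-of-the-envelope calculation shows what the precision choice means for storage. The sketch below assumes exactly one trillion parameters, every weight stored at the stated precision, and decimal gigabytes; it counts weights only and ignores activations, caches, and any per-block scale factors.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Storage for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


TRILLION = 1_000_000_000_000  # assumed parameter count, per the episode

footprints = {
    "FP32": weight_memory_gb(TRILLION, 32),
    "FP16": weight_memory_gb(TRILLION, 16),
    "INT4": weight_memory_gb(TRILLION, 4),
}

for fmt, gb in footprints.items():
    print(f"{fmt}: {gb:,.0f} GB")
```

Under these assumptions INT4 cuts weight storage to a quarter of FP16 and an eighth of FP32. The full set of weights still has to live somewhere, so a deployment on consumer hardware would likely spread it across several GPUs or keep part of it in system memory.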

The model uses a mixture-of-experts architecture combined with aggressive quantization techniques. Think of it like having a team of specialized sub-models where only a few activate for any given input, rather than running the entire model every time. It's computationally elegant in a way that Western AI labs have largely dismissed.
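The "team of specialists" idea can be sketched in a few lines. This is a toy illustration of top-k expert routing, not K2's actual design: the experts are simple stand-in functions, and the router scores are hypothetical values passed in by hand, whereas a real model computes them from the input with a learned gating layer.

```python
import math


def softmax(scores):
    """Normalize scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_scores[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and blend their outputs."""
    routes = route_top_k(router_scores, k)
    return sum(w * experts[i](x) for i, w in routes)


# Four stand-in "experts"; real ones would be neural network layers.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
router_scores = [0.1, 2.0, -1.0, 2.0]  # hypothetical gate outputs

routes = route_top_k(router_scores, k=2)
output = moe_forward(3.0, experts, router_scores, k=2)
print(routes, output)
```

Only two of the four experts run here, which is the source of the savings: total parameter count can grow while the compute spent per input stays roughly tied to the experts actually selected.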

Financial Analysis

The financial implications here are staggering, and they completely upend the current AI investment thesis. The entire Western AI buildout is predicated on the assumption that you need massive capital expenditure to compete. We're talking about Microsoft, Google, and Meta each planning to spend $50-75 billion annually on AI infrastructure.

OpenAI is talking about needing over a trillion dollars in compute spending through 2035. But if Moonshot can deliver comparable - or superior - performance for 1/20th the training cost, that entire investment thesis crumbles. Suddenly, the moat isn't about who can raise the most capital or build the biggest data centers.
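The "1/20th" figure can be checked against the two training costs quoted earlier. The sketch below uses only those two numbers, $4.6 million for K2 and $100 million for GPT-4; since the GPT-4 figure was given as "over" $100 million, treat the result as an upper bound on the ratio.

```python
from fractions import Fraction

# Figures as quoted in the episode, in millions of US dollars.
k2_cost_musd = Fraction("4.6")
gpt4_cost_musd = Fraction(100)  # stated as a lower bound ("over")

ratio = k2_cost_musd / gpt4_cost_musd
one_over = float(1 / ratio)

print(f"K2 / GPT-4 training cost: {float(ratio):.1%} (about 1/{one_over:.1f})")
```

So the quoted numbers work out to a bit better than one twentieth, and the gap would widen further if GPT-4's real cost was well above the $100 million floor.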

The moat becomes architectural innovation and engineering efficiency. Look at the market reaction to OpenAI's CFO mentioning potential government backing - $500 billion in market cap gone in hours. Investors are already nervous about the capital intensity of this industry.

Now imagine if Chinese competitors can deliver similar capabilities at a fraction of the cost. The return on investment calculus for Western hyperscalers gets very uncomfortable very quickly.

This also has massive implications for the AI startup ecosystem. If you don't need hundreds of millions in compute budget to train competitive models, that dramatically lowers the barriers to entry. We could see an explosion of well-funded Chinese AI startups - and they're already operating in an environment with 1 million AI researchers working around the clock, compared to maybe 20,000 in Silicon Valley, according to Jensen Huang.

From a business model perspective, this enables completely different pricing strategies. If your inference costs are 90 percent lower because you're running INT4 models on consumer hardware, you can undercut Western competitors on price while maintaining better margins. That's a dangerous combination.

Market Disruption

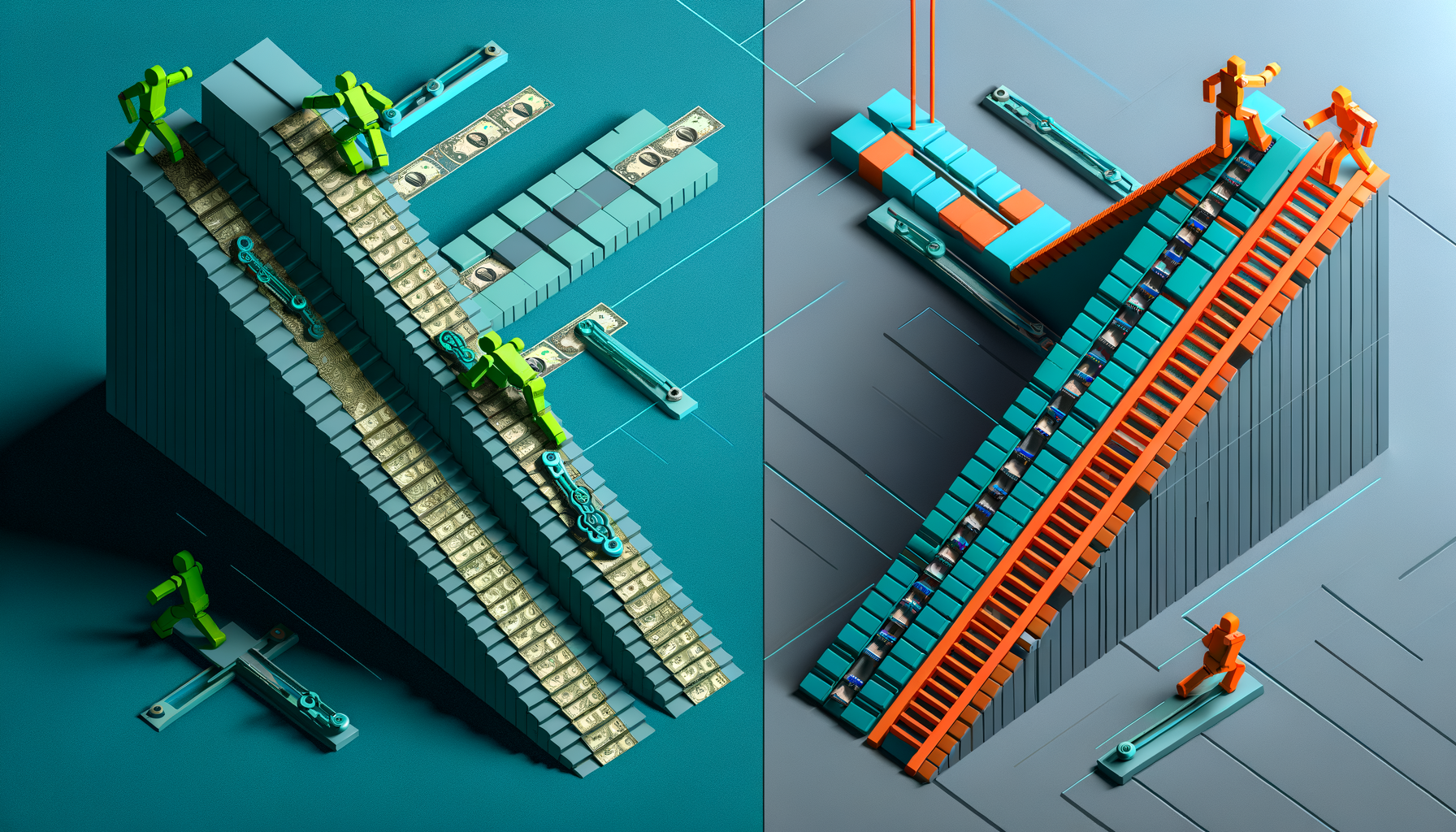

We're witnessing the AI race split into two fundamentally different approaches, and this has profound implications for market structure. Silicon Valley is building bigger - chasing AGI through scale. China is building smarter - optimizing for efficiency and deployability.

Here's why that matters: the "scale at all costs" approach has natural limits. You eventually hit constraints around power availability, chip manufacturing capacity, and capital. But the efficiency approach scales differently. Once you've figured out how to get comparable performance at 1/20th the cost, you can deploy everywhere. Edge devices, embedded systems, consumer products, industrial applications.

Think about what happened in solar panels or batteries. China didn't win those markets by building the most advanced technology. They won by relentlessly optimizing for cost and manufacturing efficiency, then deploying at massive scale. We're seeing the same playbook here.

The competitive dynamics get really interesting when you consider deployment scenarios. Western AI companies are focused on cloud services - you use their models through APIs. But if Chinese models can run on consumer hardware with comparable performance, they can be deployed locally, on-premise, in air-gapped environments, in countries with data sovereignty requirements. That's a fundamentally different value proposition.

For established players like OpenAI, Google, and Anthropic, this is an existential threat to their business models. Their entire strategy assumes that cutting-edge AI requires their massive infrastructure. If that assumption breaks, they're just expensive API providers competing against cheaper, more deployable alternatives.

The geopolitical dimension is also critical. The US has implemented extensive export controls to restrict China's access to advanced chips, assuming this would maintain American AI leadership. But Moonshot's achievement suggests these controls might be counterproductive - forcing China to innovate around constraints rather than just buying Western technology. They're developing entirely new architectures that don't depend on the latest Nvidia chips.

Cultural and Social Impact

This development reflects and reinforces a profound cultural difference in how East and West approach technological development. Silicon Valley has this almost religious belief in Moore's Law thinking - that progress comes from exponential scaling. Bigger models, more parameters, more compute. It's a very American approach: overwhelming resources applied to ambitious goals.

China's approach reflects a different engineering philosophy - one that prizes efficiency, resourcefulness, and working within constraints. It's the same mindset that built their high-speed rail network or their renewable energy infrastructure. Optimize relentlessly, manufacture at scale, deploy pragmatically.

For everyday users and developers, this could democratize AI in unprecedented ways. If you can run GPT-4-class models on a laptop or even a high-end smartphone, that changes everything about adoption patterns. You don't need cloud credits or API keys. You don't have data leaving your device. You can use AI in bandwidth-constrained environments, in developing countries, in privacy-sensitive applications.

This also shifts the conversation around AI safety and governance. Western AI labs have been arguing that responsible AI development requires massive resources and can only happen in a few well-funded organizations. But if powerful models can be trained cheaply and run locally, that argument falls apart. You can't control what you can't monitor, and you can't monitor thousands of locally-deployed models.

The workforce implications are significant too. The Western AI industry is optimized around a small number of elite researchers at elite institutions with access to massive compute. But China's model - with a million people working on AI - suggests a different path: distribute the problem across many researchers working with constrained resources. That's a more inclusive model that could reshape how we think about AI talent development globally.

Executive Action Plan

So what should technology executives actually do in response to this? Three concrete recommendations:

First, immediately diversify your AI strategy beyond just partnering with Western hyperscalers. If you're currently all-in on OpenAI, Anthropic, or Google's models, you're exposed to a major strategic risk. Start evaluating and testing open-source alternatives, particularly the Chinese models that are being released. Set up a small team to benchmark performance, test deployment scenarios, and understand the cost-quality tradeoffs. You want optionality here. In 12-18 months, the landscape might look completely different, and you don't want to be locked into expensive infrastructure that's being undercut by cheaper alternatives.

Second, rethink your AI infrastructure roadmap with efficiency as a first-class concern, not an afterthought. Stop assuming that you need massive cloud compute budgets to do serious AI work. Investigate quantized models, mixture-of-experts architectures, and local deployment options. The companies that figure out how to deliver AI capabilities at 1/10th the infrastructure cost will have a massive competitive advantage. This might mean hiring different types of ML engineers - people who can optimize for efficiency rather than just throwing more GPUs at problems.

Third, and this is crucial - develop a multi-geography AI strategy that accounts for the bifurcation we're seeing. The world is splitting into different AI ecosystems with different technological approaches, different cost structures, and different regulatory environments. If you're only deploying Western AI models, you're going to struggle in markets where data sovereignty, cost sensitivity, or infrastructure constraints favor Chinese approaches. You need teams that understand both paradigms and can deploy the right solution for each market context.

The bottom line is this: the assumptions that have driven AI strategy for the last three years are being fundamentally challenged. The companies that recognize this shift early and adapt their strategies accordingly will have enormous advantages over those that remain committed to the "scale at all costs" paradigm. This isn't just about China versus America - it's about different fundamental approaches to building intelligence, and both approaches are going to coexist and compete. Your job as an executive is to make sure your organization can thrive in that more complex, multipolar AI landscape.
