Microsoft Launches Homegrown AI Models, Signals OpenAI Independence

Episode Summary

Your daily AI newsletter summary for August 30, 2025

Full Transcript

TOP NEWS HEADLINES

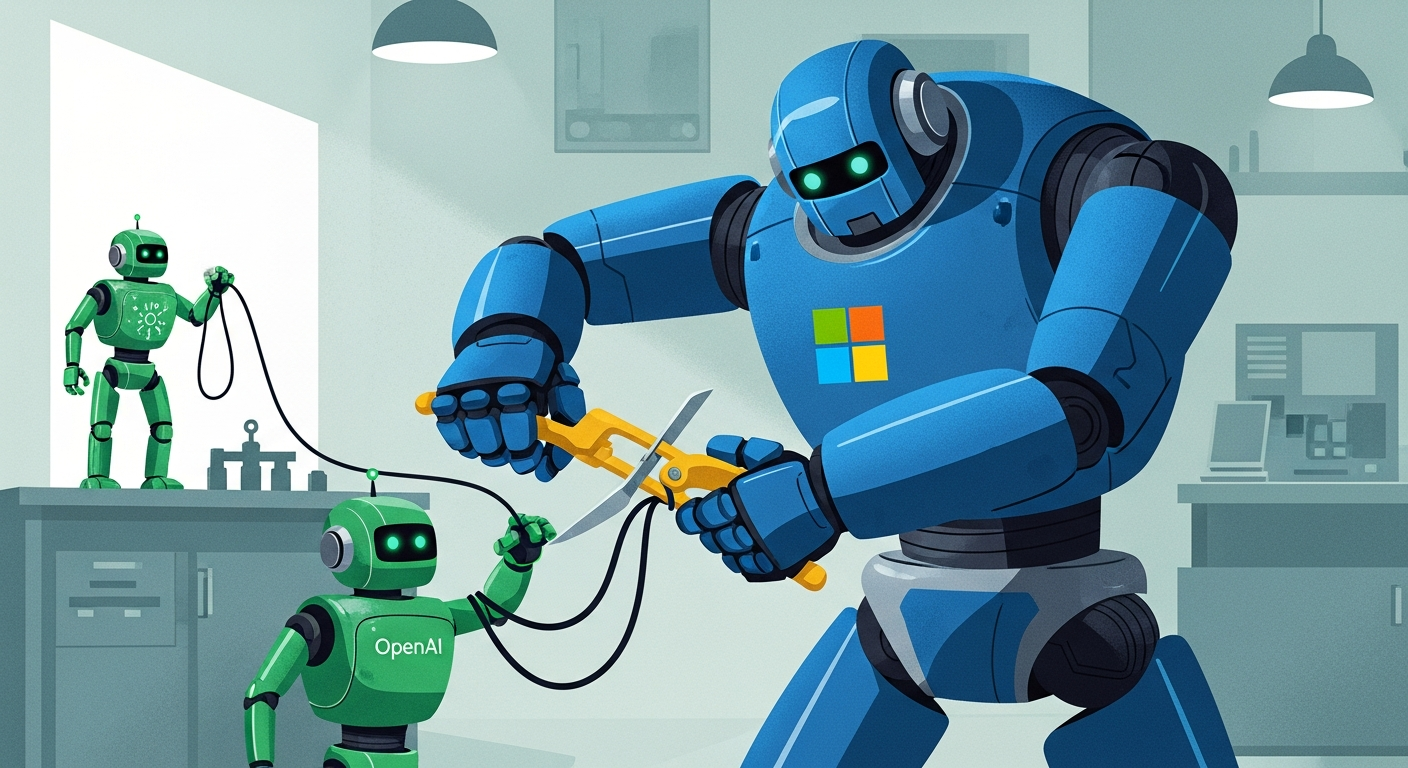

Microsoft just threw a curveball at their OpenAI partnership by releasing their first fully homegrown AI models - MAI-Voice-1 and MAI-1-preview. This marks a significant shift from years of heavy reliance on OpenAI's technology, with Microsoft AI CEO Mustafa Suleyman claiming these models are "up there with some of the best in the world."

OpenAI made voice AI development dramatically easier with their general availability launch of gpt-realtime, a speech-to-speech model that can handle complex conversations, detect nonverbal cues, and even process images mid-conversation. It achieves 82.8 percent accuracy on an audio reasoning benchmark, up from 65.6 percent for the previous model.

xAI entered the coding assistant arms race with Grok Code Fast 1, a specialized model optimized purely for speed and cost at just 20 cents per million input tokens - that's roughly 90 percent cheaper than competitors. They're temporarily offering it free through partnerships with GitHub Copilot and Cursor.
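To make that pricing concrete, here is a rough back-of-the-envelope sketch in Python. The 20-cent input price and the "roughly 90 percent cheaper" figure come from the item above; the monthly token volume is a made-up workload, and the competitor price is simply what the 90 percent claim implies, not a published rate. Output-token pricing is left out.

```python
def input_cost_usd(input_tokens: int, price_per_million_usd: float) -> float:
    """Cost in USD of sending `input_tokens` at a per-million-token input price."""
    return input_tokens / 1_000_000 * price_per_million_usd


GROK_INPUT_PRICE_USD = 0.20   # per million input tokens, as quoted above
CLAIMED_DISCOUNT = 0.90       # "roughly 90 percent cheaper", as quoted above

# Competitor price implied by the discount claim (derived, not a published rate).
implied_competitor_price_usd = GROK_INPUT_PRICE_USD / (1 - CLAIMED_DISCOUNT)

# Hypothetical workload: 500 million input tokens per month.
monthly_input_tokens = 500_000_000

grok_monthly = input_cost_usd(monthly_input_tokens, GROK_INPUT_PRICE_USD)
competitor_monthly = input_cost_usd(monthly_input_tokens, implied_competitor_price_usd)

print(f"Implied competitor input price: ${implied_competitor_price_usd:.2f} per million tokens")
print(f"Grok Code Fast 1 input cost:    ${grok_monthly:,.2f} per month")
print(f"Implied competitor input cost:  ${competitor_monthly:,.2f} per month")
```

Under those assumptions, the gap works out to about 100 dollars versus about 1,000 dollars a month on input tokens alone.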

Meta is pushing hard to release Llama 4.5 before year-end, marking one of the first major projects from their restructured Meta Superintelligence Labs.

This accelerated timeline suggests they're feeling competitive pressure from the rapid pace of model releases across the industry.

Anthropic quietly switched from opt-in to opt-out for using consumer chat conversations to train their AI models, giving users until September 28th to manually disable data usage.

This policy reversal highlights the ongoing tension between AI improvement and user privacy.

Cohere launched Command A Translate, claiming state-of-the-art performance across 23 business languages with a key differentiator - companies can deploy it entirely on their own servers, keeping sensitive translations inside their own infrastructure.

DEEP DIVE ANALYSIS

Let's dive deep into Microsoft's homegrown AI announcement because this fundamentally reshapes one of the most important partnerships in tech. What we're witnessing isn't just a product launch - it's Microsoft declaring independence from OpenAI, and the implications are massive.

Technical Deep Dive

Microsoft's MAI-Voice-1 can generate a full minute of speech in under a second on a single GPU, which is genuinely impressive from a computational efficiency standpoint. The underlying architecture likely leverages their massive Azure infrastructure and the custom silicon investments they've been making for years. MAI-1-preview, their text model, was trained on around 15,000 NVIDIA H100 GPUs - far fewer than the clusters reportedly used for some rival frontier models, suggesting they've achieved efficiency gains through better training methodologies or architectural innovations.

The fact that they're calling it a "mixture-of-experts" foundation model, meaning each input is routed through only a subset of the model's parameters, tells us they're using the same scaling techniques as industry leaders, but with their own secret sauce.
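As a quick sanity check on the "full minute of speech in under a second" figure, the sketch below computes the implied speedup over real-time playback. The function name is ours, and the one-second generation time is an upper bound taken from the claim, so the result is a floor rather than a measured number.

```python
def speedup_over_real_time(audio_seconds: float, generation_seconds: float) -> float:
    """How many times faster than playback speed the audio was generated."""
    if generation_seconds <= 0:
        raise ValueError("generation_seconds must be positive")
    return audio_seconds / generation_seconds


# 60 seconds of audio in at most 1 second of compute, per the claim above.
minimum_speedup = speedup_over_real_time(audio_seconds=60.0, generation_seconds=1.0)
print(f"At least {minimum_speedup:.0f}x faster than real time")
```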

Financial Analysis

This move has enormous financial implications. Microsoft has invested over 13 billion dollars in OpenAI, yet the Copilot features built on OpenAI's models leave part of the value of every request with OpenAI rather than Microsoft. By bringing model development in-house, they can capture more of the margin on AI services - we're potentially talking about billions in annual revenue that was previously flowing to their partner.

The training costs for MAI-1 were likely in the tens of millions, but that's a one-time investment versus ongoing per-token payments to OpenAI. For Microsoft's stock price, this reduces their dependency risk and positions them as a true AI platform, not just OpenAI's cloud provider and distributor.
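The one-time-versus-ongoing argument is really a break-even calculation, which can be sketched like this. Every number here is a placeholder: the 50 million dollar training cost is just one point inside "tens of millions," and the 2 dollars per million tokens is a hypothetical payment rate, not a known contract term. The sketch also ignores the cost of serving an in-house model, which would push the real break-even point further out.

```python
def break_even_million_tokens(training_cost_usd: float,
                              payment_per_million_tokens_usd: float) -> float:
    """Millions of tokens after which a one-time training cost is cheaper
    than paying a partner per token (serving costs ignored)."""
    if payment_per_million_tokens_usd <= 0:
        raise ValueError("payment_per_million_tokens_usd must be positive")
    return training_cost_usd / payment_per_million_tokens_usd


TRAINING_COST_USD = 50_000_000          # hypothetical, within "tens of millions"
PAYMENT_PER_MILLION_TOKENS_USD = 2.00   # hypothetical per-token payment rate

millions = break_even_million_tokens(TRAINING_COST_USD, PAYMENT_PER_MILLION_TOKENS_USD)
print(f"Break-even at about {millions / 1_000_000:.0f} trillion tokens")
```

With these placeholder inputs, the training cost pays for itself after roughly 25 trillion tokens of usage.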

Market Disruption

This creates a fascinating three-way dynamic in enterprise AI. You now have Microsoft with homegrown models, Google with Gemini, and OpenAI potentially losing their biggest enterprise distribution channel. For customers, this means more options but also more complexity in choosing AI providers.

Microsoft's enterprise relationships give them a massive advantage here - CIOs who already run on Office 365 and Azure now have a seamless, integrated AI solution without adding another vendor dependency. This could accelerate enterprise AI adoption because it simplifies the "who do we trust with our data" question that many large companies struggle with.

Cultural and Social Impact

This shift represents the maturation of the AI industry from partnerships of necessity to true competition. In 2019, Microsoft partnered with OpenAI because they didn't have the AI talent or infrastructure. Now they've built both.

This pattern will repeat across the industry - we'll see fewer AI partnerships and more vertical integration as companies realize AI is too strategic to outsource. For developers and businesses, this means more choice but also platform lock-in decisions that will define the next decade of technology strategy.

Executive Action Plan

First, technology executives should immediately audit their AI dependencies and costs. If you're heavily invested in OpenAI's ecosystem, you need contingency plans, as Microsoft may prioritize their own models in future Copilot updates.

Second, this is the perfect time to negotiate with AI providers - Microsoft's entry into the model space creates pricing pressure that benefits customers. Start pilot programs with multiple AI providers now while you have leverage.

Third, consider the infrastructure implications - Microsoft's ability to offer integrated AI, cloud, and productivity tools in one package will appeal to CFOs looking to simplify vendor relationships. If you're not already evaluating how Microsoft's integrated AI strategy fits your technology roadmap, you're behind the curve.
